Explainable AI in Triage: Making Black Box Decisions Transparent

Explainable AI (XAI) in triage aims to make black box decisions transparent for clinicians. This article first explains why that matters and then examines the aspects that make it essential for modern healthcare.

The integration of Artificial Intelligence (AI) into critical decision-making domains, particularly healthcare, presents both transformative opportunities and significant challenges. While AI systems offer remarkable capabilities in processing vast datasets and identifying complex patterns, their inherent opacity, often referred to as the "black box" problem, can undermine trust and accountability. This concern is particularly acute in high-stakes environments like medical triage, where rapid, accurate, and justifiable decisions directly impact patient outcomes. Explainable AI (XAI) emerges as a crucial solution, aiming to demystify these complex systems and foster confidence in AI-driven processes.

1.1 The Critical Role of Triage in High-Stakes Environments

Triage, derived from the French term "trier" meaning "to sort," is a fundamental process in emergency medicine and other critical services. It involves the rapid assessment, sorting, and prioritization of individuals based on the severity of their condition and the urgency of their need for care, especially when resources are limited. In hospital emergency departments, disaster zones, or on battlefields, triage acts as a life-saving mechanism, ensuring that patients with life-threatening conditions receive immediate attention, thereby optimizing resource allocation and enhancing patient outcomes.

The core objectives of triage include the swift identification of life-threatening conditions, effective resource allocation, and the enhancement of patient outcomes through prompt intervention. Historically, triage has evolved from military medicine to encompass civilian emergency care, guided by principles of immediacy, fairness, and efficiency. Modern triage systems consider various factors such as main complaint, vital signs, consciousness level, pain intensity, and health history to categorize patients into priority levels, typically using a color-coded system (e.g., Red for immediate, Yellow for delayed, Green for minor). The effectiveness of triage directly correlates with preventing avoidable deaths, reducing hospital stays, lowering healthcare expenses by averting complications, and improving overall emergency department efficiency.

Beyond medicine, the concept of triage is also vital in cybersecurity and IT incident management. Here, it involves rapidly assessing and prioritizing alerts and incidents based on risk and urgency to focus on critical threats, streamline response, and prevent minor issues from escalating into full-blown incidents. Whether in healthcare or cybersecurity, the underlying principle remains the same: to make swift, informed decisions under pressure to allocate limited resources effectively and mitigate potential harm.

1.2 The "Black Box" Problem in AI: Opaque Decisions and Their Risks

Many advanced AI systems, particularly deep learning models, function as "black boxes". This means their internal decision-making processes are not easily interpretable or transparent to human users. While these models demonstrate remarkable capabilities in tasks like large-scale data analysis and pattern recognition, their opaque nature poses significant challenges, especially in sensitive domains like healthcare, criminal justice, and finance. The inability to understand the system's logic makes meaningful human intervention difficult and raises concerns about preventing harm.

The "black box" problem stems from how these models are built: they learn from vast amounts of data by finding complex patterns that often defy human comprehension, even for their designers. This opacity can lead to lower stakeholder trust, as users cannot verify or understand the reasoning behind AI outputs. In healthcare, for instance, an AI model might suggest a diagnosis from a scan but offer no explanation that doctors can verify, hindering validation and treatment justification. This lack of transparency also complicates bias detection, ethical compliance, and debugging efforts, making it challenging to identify why a model might fail or behave unexpectedly. The ethical and regulatory implications are profound, as decisions impacting lives are made without clear accountability or the ability to scrutinize the underlying rationale.

1.3 Introducing Explainable AI (XAI): Bridging the Gap to Trust

Explainable AI (XAI) is a crucial field that addresses the "black box" problem by making AI systems more understandable and interpretable to humans. At its core, XAI aims to provide insights into how AI arrives at its conclusions, allowing users to verify, trust, and refine these systems. This is particularly vital in high-stakes industries like healthcare, where AI decisions must be explainable to meet regulatory and ethical standards. XAI is not merely an add-on; it is increasingly recognized as a necessary feature of trustworthy AI, bridging the gap between complex AI models and their application domains. By enhancing transparency, XAI seeks to build confidence in AI-powered decision-making, ensuring that these powerful tools can be deployed responsibly and effectively.

2. Understanding Explainable AI (XAI)

Explainable AI (XAI) refers to a set of processes and methods designed to allow human users to comprehend and trust the results and output generated by machine learning algorithms. It helps characterize model accuracy, fairness, transparency, and outcomes in AI-powered decision-making. XAI is a key requirement for implementing responsible AI, which emphasizes fairness, model explainability, and accountability in the large-scale deployment of AI methods.

2.1 Defining XAI: Core Principles and Components

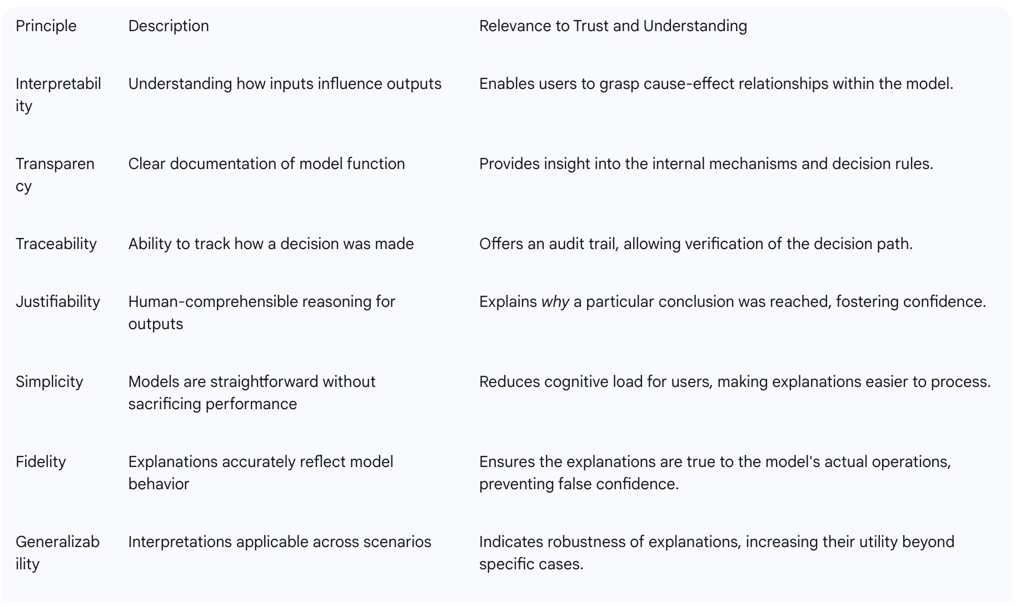

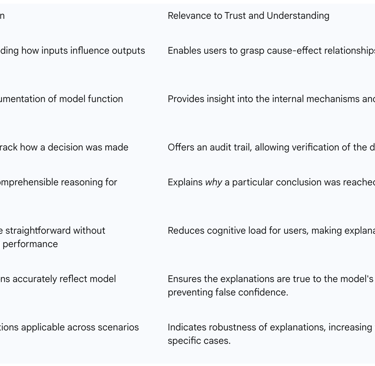

An AI system is considered explainable if it includes several key components that enhance human understanding of its operations. These components contribute to its ability to provide clear, justifiable reasoning for its outputs.

Interpretability: This refers to the ability to understand how inputs influence outputs. It allows users to grasp the relationship between the data fed into the model and the predictions it generates.

Transparency: This involves clear documentation of how a model functions, including its internal mechanisms and decision rules. It means making the inner workings of the AI system accessible and comprehensible.

Traceability: This is the ability to track how a specific decision was made, providing an audit trail of the AI's reasoning process. It allows users to follow the path from input to output.

Justifiability: This is the capability to provide human-comprehensible reasoning behind the AI's outputs, offering clear explanations for its conclusions.
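Traceability in particular lends itself to a concrete illustration. The following is a minimal sketch of an audit record for a single recommendation, assuming a hypothetical `TriageDecisionRecord` structure; every field name and value is an illustrative assumption, not part of any specific system.

```python
import json
from dataclasses import dataclass, asdict, field
from datetime import datetime, timezone


@dataclass
class TriageDecisionRecord:
    """One auditable triage recommendation (all field names are illustrative)."""
    patient_ref: str      # pseudonymous reference, not a real identifier
    model_version: str    # which model produced the output
    inputs: dict          # the feature values the model saw
    recommendation: str   # the model's output, e.g. a priority level
    explanation: dict     # per-feature contributions or the rules that fired
    created_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )

    def to_json(self) -> str:
        # Sorted keys keep the serialized record stable for diffing and audits.
        return json.dumps(asdict(self), sort_keys=True)


record = TriageDecisionRecord(
    patient_ref="case-0001",
    model_version="demo-0.1",
    inputs={"heart_rate": 128, "spo2": 89},
    recommendation="immediate",
    explanation={"spo2": "below hypothetical threshold of 90"},
)
print(record.to_json())
```

Storing the inputs, the model version, and the explanation together is what lets a reviewer later follow the path from input to output for that one decision.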

For AI to be truly explainable, it must adhere to key interpretability principles:

Simplicity: Models should be as straightforward as possible without sacrificing performance, making them easier to understand.

Fidelity: Explanations provided by the XAI system must accurately reflect the model's actual behavior, ensuring that the explanation is not misleading.

Generalizability: Interpretations should be applicable across different scenarios and datasets, indicating that the explanations are robust and not specific to a single instance.

These principles collectively build trust in AI decision-making by making the AI's reasoning clear and accountable.
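Of these principles, fidelity is the easiest to quantify. One simple (and by no means the only) way to measure it is the share of cases in which a simplified explanation reproduces the model's actual output. The sketch below assumes a hypothetical `black_box` classifier and a one-rule `surrogate` offered as its explanation; the thresholds are invented for illustration and have no clinical meaning.

```python
def fidelity(black_box, surrogate, samples):
    """Fraction of samples on which the surrogate reproduces the black-box label."""
    if not samples:
        raise ValueError("need at least one sample")
    agree = sum(1 for x in samples if black_box(x) == surrogate(x))
    return agree / len(samples)


# Hypothetical stand-ins: a "black box" with an interaction term, and a simple
# rule offered as its explanation.
def black_box(x):
    return int(x["heart_rate"] > 120 or (x["spo2"] < 92 and x["age"] > 70))


def surrogate(x):
    return int(x["heart_rate"] > 120)


samples = [
    {"heart_rate": 130, "spo2": 97, "age": 40},  # both return 1
    {"heart_rate": 80, "spo2": 98, "age": 30},   # both return 0
    {"heart_rate": 90, "spo2": 90, "age": 82},   # black box 1, surrogate 0
    {"heart_rate": 100, "spo2": 90, "age": 50},  # both return 0
]
print(fidelity(black_box, surrogate, samples))  # 0.75
```

A fidelity below 1.0 signals that the explanation omits something the model actually uses, here the interaction between low oxygen saturation and age.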

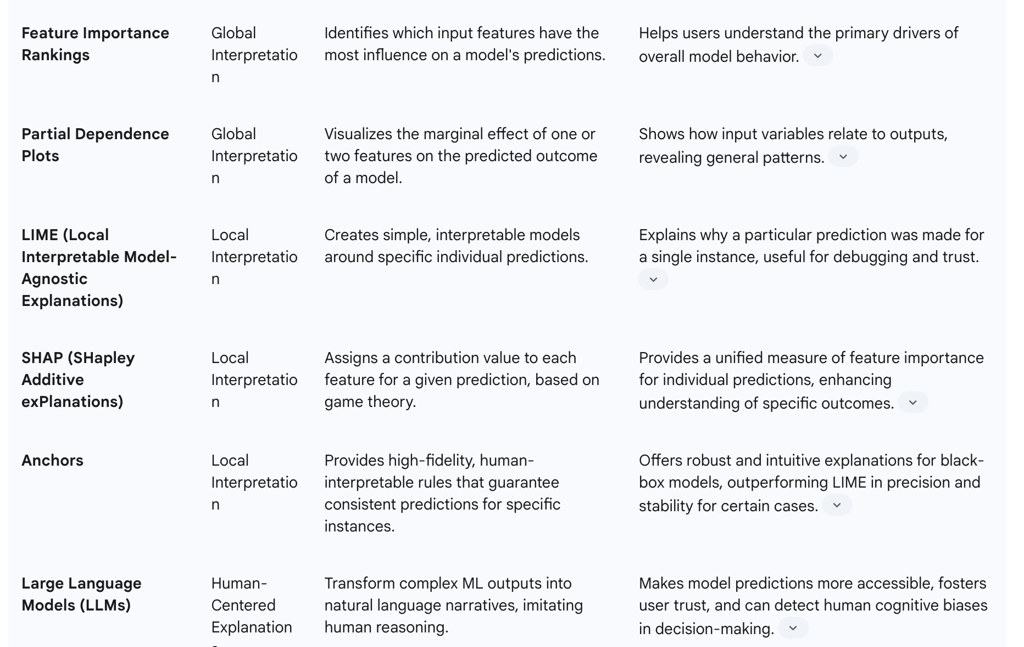

Table 1: Key Principles of Explainable AI (XAI)

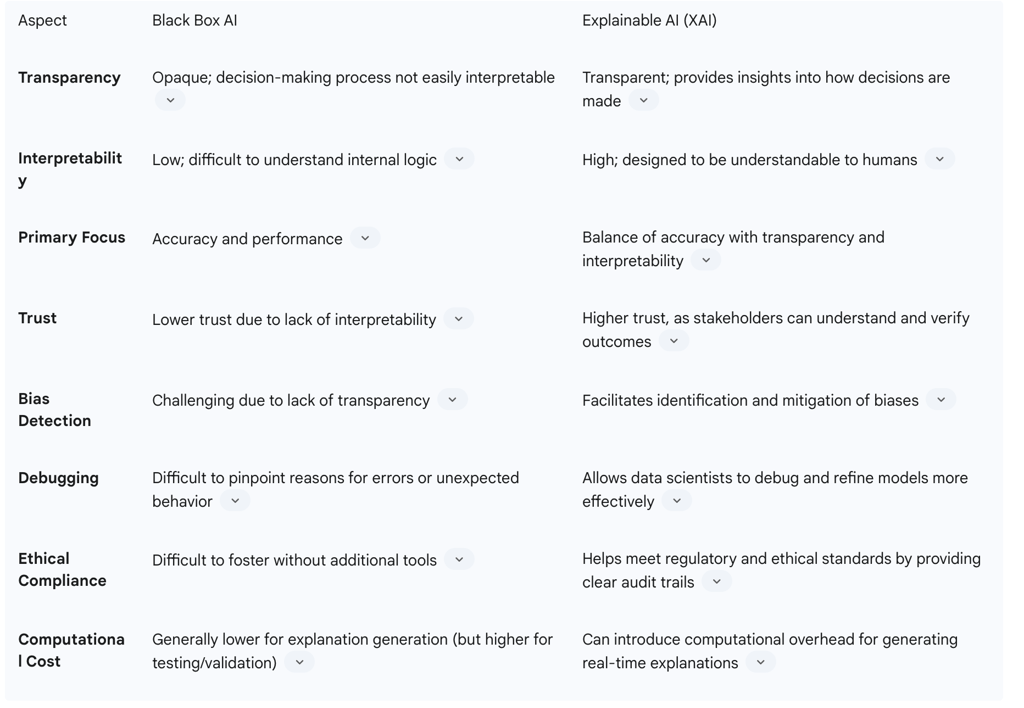

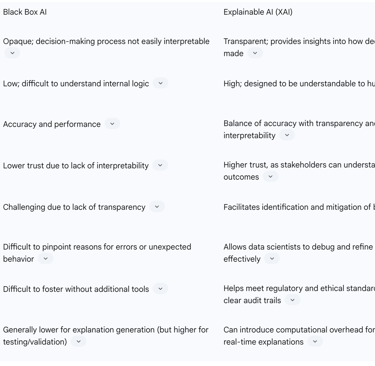

2.2 Distinction from Traditional AI and Black Box Models

Traditional AI models often prioritize accuracy and performance, particularly in complex tasks, but frequently lack interpretability. These "black box" models, such as deep neural networks, analyze data and produce outcomes without revealing the logic behind their predictions, making their underlying reasoning opaque. This opacity can undermine trust and accountability, especially in sensitive areas like healthcare where understanding the rationale behind decisions is critical.

Explainable AI, in contrast, balances accuracy with transparency, ensuring that stakeholders can understand and trust its decisions. Black box AI excels at handling vast datasets and learning complex patterns, but detecting bias and demonstrating ethical compliance in such models is difficult and often requires additional tools or frameworks. XAI, on the other hand, aims for higher trust by enabling stakeholders to understand and verify outcomes. This distinction is critical in high-stakes industries where regulatory and ethical standards demand explainable AI decisions. The goal is to move towards "glass-box" AI systems where transparency is built in, rather than attempting to explain an inherently opaque system after the fact.

2.3 Key Techniques for Achieving Explainability (e.g., LIME, SHAP, LLMs for XAI)

To make AI systems more transparent, various technical approaches and interpretability concepts are applied. These techniques help uncover how AI systems make decisions across different stages of their lifecycle.

Global Interpretation Approaches: These methods explain how a model works overall. Techniques include:

Feature Importance Rankings: Identifying which input features most influence predictions, helping stakeholders understand the primary drivers of AI outcomes.

Partial Dependence Plots: Visualizing the relationship between input variables and outputs, showing how a change in a feature affects the predicted outcome.
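Both global techniques can be sketched in a few lines of plain Python. The example below is an illustration under stated assumptions rather than a reference implementation: `model` is a hypothetical risk score, the feature names and coefficients are invented, and permutation importance is used as one common way to produce a feature importance ranking.

```python
import random


def model(row):
    """Hypothetical risk score; it deliberately ignores 'shoe_size'."""
    return 0.02 * row["heart_rate"] + 0.5 * (row["spo2"] < 92)


def permutation_importance(model, rows, targets, feature, seed=0):
    """Increase in mean squared error after shuffling one feature column."""
    def mse(data):
        return sum((model(r) - t) ** 2 for r, t in zip(data, targets)) / len(data)

    shuffled_values = [r[feature] for r in rows]
    random.Random(seed).shuffle(shuffled_values)
    shuffled = [{**r, feature: v} for r, v in zip(rows, shuffled_values)]
    return mse(shuffled) - mse(rows)


def partial_dependence(model, rows, feature, grid):
    """Average prediction when `feature` is forced to each grid value."""
    return [
        sum(model({**r, feature: v}) for r in rows) / len(rows)
        for v in grid
    ]


rows = [
    {"heart_rate": 70, "spo2": 98, "shoe_size": 42},
    {"heart_rate": 95, "spo2": 95, "shoe_size": 38},
    {"heart_rate": 120, "spo2": 90, "shoe_size": 44},
    {"heart_rate": 140, "spo2": 88, "shoe_size": 40},
]
targets = [model(r) for r in rows]  # toy setup: the model fits its targets exactly

for name in ("heart_rate", "spo2", "shoe_size"):
    print(name, permutation_importance(model, rows, targets, name))
print(partial_dependence(model, rows, "heart_rate", [60, 100, 140]))
```

A feature the model never reads, such as `shoe_size` here, gets an importance of exactly zero, while the partial dependence values rise steadily with heart rate because the toy score is linear in it.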

Local Interpretation Strategies: These focus on explaining individual predictions, providing insights into why a specific input led to a particular output. Key methods include:

LIME (Local Interpretable Model-Agnostic Explanations): This technique explains an individual prediction by perturbing the input around that instance, querying the model on the perturbed samples, and fitting a simple, interpretable surrogate (typically a sparse linear model) that is weighted toward samples close to the original. The surrogate's coefficients indicate which features drove that particular prediction. LIME has been reported to achieve better quantitative scores in some evaluations.

SHAP (SHapley Additive exPlanations): This method draws on cooperative game theory, assigning each feature a Shapley value that represents its contribution to a given prediction relative to a baseline. The contributions are additive: together with the baseline output, they sum to the model's output for that instance. SHAP is often favored when a consistent, per-feature attribution score is needed to explain a decision.

Anchors: A model-agnostic method that produces high-fidelity, human-interpretable if-then rules ("anchors"). When an anchor's conditions hold for an instance, the model's prediction stays the same with high probability, regardless of the values of the other features. Anchors have been reported to outperform LIME in precision and stability, offering robust and intuitive explanations for black-box models.
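The game-theoretic attribution that SHAP builds on can be computed exactly for a small number of features by enumerating every coalition. The sketch below does that for a hypothetical three-feature risk score, replacing "absent" features with values from a baseline patient. This is one common convention and an assumption here; the SHAP library itself relies on approximations and model-specific algorithms rather than this brute-force loop.

```python
from itertools import combinations
from math import factorial


def shapley_values(predict, instance, baseline):
    """Exact Shapley values; features outside a coalition take baseline values."""
    names = list(instance)
    n = len(names)

    def value(coalition):
        mixed = {k: (instance[k] if k in coalition else baseline[k]) for k in names}
        return predict(mixed)

    phi = {}
    for name in names:
        others = [k for k in names if k != name]
        total = 0.0
        for size in range(n):
            # Shapley weight for coalitions of this size.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                with_feature = value(set(subset) | {name})
                without_feature = value(set(subset))
                total += weight * (with_feature - without_feature)
        phi[name] = total
    return phi


def predict(x):
    # Hypothetical risk score with an interaction between age and low SpO2.
    return 0.01 * x["heart_rate"] + 0.3 * (x["spo2"] < 92) * (x["age"] > 65)


instance = {"heart_rate": 130, "spo2": 88, "age": 80}
baseline = {"heart_rate": 80, "spo2": 97, "age": 45}
phi = shapley_values(predict, instance, baseline)
print(phi)
```

In this toy case the heart-rate term receives its full effect of about 0.5, and the 0.3 interaction effect is split evenly between `spo2` and `age`. The contributions add up to the difference between the prediction for the instance and the prediction for the baseline, which is the additivity property the method is named for. The cost grows as 2^n model evaluations per feature, which is why practical tools approximate.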

Model-Agnostic Explanation Tools: Frameworks like SHAP and LIME can be applied to any model type, making them widely useful for enhancing AI transparency regardless of the underlying AI architecture. Other methods include rule extraction, visualization, surrogate models, and saliency maps.
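Because such tools only need to call the model, the local-surrogate idea behind LIME can be sketched without any knowledge of the model's internals. The example below is a simplified illustration, not the LIME library: it samples Gaussian perturbations around one patient, weights them with an exponential proximity kernel, and fits a weighted linear model by solving the normal equations. The `black_box` function, the feature scales, and the kernel width are all assumptions chosen for the demonstration.

```python
import math
import random


def solve(a, b):
    """Solve a small linear system a x = b by Gauss-Jordan elimination."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                m[r] = [x - factor * y for x, y in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]


def local_surrogate(predict, instance, scales, n_samples=500, width=1.0, seed=0):
    """Fit a proximity-weighted linear model around `instance`.

    Returns [intercept, slope_1, ..., slope_d], with slopes expressed per
    unit of the scaled offset (one unit = one `scales[i]` of feature i).
    """
    rng = random.Random(seed)
    d = len(instance)
    xtx = [[0.0] * (d + 1) for _ in range(d + 1)]
    xty = [0.0] * (d + 1)
    for _ in range(n_samples):
        z = [rng.gauss(0.0, 1.0) for _ in range(d)]  # scaled offset
        x = [xi + zi * s for xi, zi, s in zip(instance, z, scales)]
        # Exponential kernel: nearby samples count more than distant ones.
        w = math.exp(-sum(zi * zi for zi in z) / (2 * width ** 2))
        row = [1.0] + z
        y = predict(x)
        for i in range(d + 1):
            xty[i] += w * row[i] * y
            for j in range(d + 1):
                xtx[i][j] += w * row[i] * row[j]
    return solve(xtx, xty)


def black_box(x):
    # Hypothetical nonlinear risk model over (heart_rate, spo2).
    heart_rate, spo2 = x
    return 1.0 / (1.0 + math.exp(-(0.05 * (heart_rate - 110) - 0.4 * (spo2 - 92))))


coefs = local_surrogate(black_box, instance=[125.0, 90.0], scales=[10.0, 2.0])
print(coefs)  # [intercept, heart_rate slope, spo2 slope]
```

The signs of the two slopes give the local explanation: around this patient, a higher heart rate pushes the predicted risk up and a higher oxygen saturation pushes it down. The explanation is only valid near the chosen instance, which is the key limitation of any local surrogate.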

Large Language Models (LLMs) for XAI: LLMs offer a promising approach to enhancing XAI by transforming complex machine learning outputs into easy-to-understand narratives. They can make model predictions more accessible to users and help bridge the gap between sophisticated model behavior and human interpretability. LLMs are capable of imitating human decision-making and effectively understanding "free-text" clinical data, which is prevalent in emergency departments. This capability allows them to detect and anticipate human cognitive biases, such as gender bias in triage decisions, by reproducing and measuring caregiver biases.

2.4 The Spectrum of Interpretability: From Inherently Transparent to Post-Hoc Explanations

The field of XAI recognizes a spectrum of interpretability, ranging from models that are inherently transparent ("white box" or "glass-box" models) to those that require post-hoc explanation techniques for their "black box" nature.

Inherently Transparent Models (White Box/Glass Box AI): These models are designed from the outset to provide full transparency in their decision-making processes. Examples include linear regression and decision trees, where the logic is clear and directly understandable by humans. The advantage here is that transparency is built-in, potentially leading to higher trust and easier debugging. However, simpler models may underperform on complex tasks where highly accurate predictions are paramount.

Post-Hoc Explanations: For complex "black box" models like deep learning networks, which prioritize accuracy but lack intrinsic interpretability, post-hoc explanation techniques are applied after the model has been trained and deployed. These methods, such as LIME and SHAP, analyze the model's outputs to infer and present explanations. While valuable, post-hoc explanations are approximations and may not fully capture the model's true reasoning, running the risk of over-simplifying or misrepresenting complicated systems. There is also often a trade-off between accuracy and interpretability: complex models tend to be more accurate but harder to explain, while simpler models are easier to interpret but may be less accurate on complex tasks. The challenge lies in balancing the performance benefits of black box models with the transparency advantages offered by explainable systems. The ultimate goal is to shift AI development from "interpretability as an add-on" to "interpretability by design," ensuring transparency is foundational rather than an afterthought.
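The "interpretability by design" end of this spectrum can be illustrated with a logistic scoring model whose explanation is simply its own arithmetic. The sketch below assumes three binary risk flags with invented coefficients; the numbers are for illustration only and are not clinically validated.

```python
import math

# Hypothetical coefficients for illustration only; not clinically validated.
INTERCEPT = -4.0
WEIGHTS = {"age_over_65": 1.0, "heart_rate_over_120": 1.5, "spo2_under_92": 2.0}


def explainable_risk(flags):
    """Return (probability, per-feature log-odds contributions)."""
    contributions = {name: WEIGHTS[name] * int(flags[name]) for name in WEIGHTS}
    log_odds = INTERCEPT + sum(contributions.values())
    probability = 1.0 / (1.0 + math.exp(-log_odds))
    return probability, contributions


prob, why = explainable_risk(
    {"age_over_65": True, "heart_rate_over_120": False, "spo2_under_92": True}
)
print(round(prob, 3), why)
```

Here the explanation is exact rather than approximate: each feature's contribution to the log-odds can be read directly from the model, so no post-hoc technique is needed. The price is that such a model cannot represent interactions or nonlinear effects unless they are added by hand.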

3. XAI in Medical Triage: Benefits and Applications

The application of Explainable AI in medical triage holds significant promise for transforming emergency department operations and improving patient care. By making AI's decision-making transparent, XAI can enhance efficiency, foster trust, and support healthcare professionals in high-pressure environments.

3.1 Enhancing Patient Prioritization and Resource Allocation

AI-driven triage systems can automate patient prioritization by analyzing real-time data, including vital signs, medical history, and presenting symptoms. This allows for a more objective and rapid assessment and categorization of patients compared to traditional systems that rely solely on real-time clinician evaluation. XAI specifically helps triage nurses and physicians make better decisions about patient priority and resource allocation by clearly explaining risk assessments. When an AI system can explain why one patient should be seen immediately while another can safely wait, it helps staff make confident triage decisions even during busy periods.

For instance, an AI system can prioritize patients not only based on their presenting symptoms but also by considering historical data, such as a history of cardiovascular disease, which might indicate a higher risk of complications. This improves the accuracy and relevance of triage outcomes. Furthermore, AI-driven triage systems can manage resources more effectively, especially during high-volume periods, by automatically adjusting patient prioritization based on real-time conditions and resource availability. This ensures that critical resources like staff, beds, and diagnostic tools are allocated more efficiently, contributing to reduced wait times and optimized patient flow.

3.2 Improving Diagnostic Support and Personalized Treatment Plans

XAI systems can significantly enhance diagnostic support in emergency settings by processing vast amounts of data instantly while providing clear reasoning that helps physicians make confident decisions quickly. These systems analyze multiple data streams—from vital signs and lab results to imaging studies and patient history—to offer diagnostic support. By showing which symptoms, test results, and patient factors led to specific recommendations, XAI enables physicians to focus their attention on the most critical aspects of each case.

For complex cases where multiple diagnoses are possible or treatment decisions involve numerous competing factors, XAI systems can outline different diagnostic possibilities and the evidence supporting each. This is particularly valuable when dealing with multiple comorbidities, drug interactions, or situations where standard protocols may not clearly apply. Moreover, XAI systems can help identify personalized treatment approaches by clearly showing how individual patient characteristics influence treatment recommendations. This transparency allows physicians to understand not just what treatment is recommended, but why it is appropriate for that specific patient at that specific time. This ability to provide immediate access to relevant patient data and the latest medical knowledge empowers healthcare professionals to navigate complex and voluminous data, ensuring that critical decisions are both informed and timely.

3.3 Fostering Clinician Confidence and Patient Trust

A primary benefit of XAI in emergency medicine is its ability to build trust among both clinicians and patients. Users and stakeholders are more likely to trust AI systems when they understand how decisions are made. XAI fosters confidence by making the AI's reasoning clear and accountable. For clinicians, XAI helps them grasp the rationale behind AI-generated diagnoses and recommendations, ensuring informed decision-making that considers both AI insights and clinical judgment. This is crucial for medical staff to check AI suggestions and feel confident in acting swiftly and decisively based on AI-assisted conclusions.

For patients and their families, understanding the reasoning behind medical decisions increases their likelihood of trusting the care they receive and complying with treatment recommendations. Clear explanations of how AI works help healthcare staff and patients trust the system, promoting patient autonomy and consent by ensuring individuals are aware if AI is used in handling their calls and how their data is managed. This transparency is essential for gaining the trust of healthcare providers and ensuring that the systems' recommendations can be effectively scrutinized and validated.

3.4 Case Studies and Real-World Impact

While full-scale implementations are still evolving, several initiatives and studies highlight the potential and necessity of XAI in medical triage and emergency services:

AI for ER Overcrowding: AI systems are being developed to analyze historical data, weather patterns, and local events to forecast patient surges in emergency rooms, allowing hospitals to adjust staffing and resources proactively. AI-powered triage tools assess patient symptoms and vital signs in real-time to prioritize critical cases, ensuring life-threatening conditions are not delayed. This aims to reduce wait times by automating parts of the triage process and streamlining patient flow, ultimately supporting overworked staff by taking over repetitive tasks and providing actionable insights.

Bias Detection in Triage: Research has demonstrated how Large Language Models (LLMs) trained on patient records can reproduce and measure caregiver biases, such as gender bias, during triage. In one study, an AI model trained to triage patients based on clinical texts was able to identify that, based on identical clinical records, the severity of women's conditions tended to be underestimated compared to men's. This case study exemplifies how generative AI can help detect and anticipate human cognitive biases, paving the way for fairer and more effective management of medical emergencies.

Emergency Room Optimization Systems: Proposed AI-driven decision support systems integrate real-time capacity monitoring with Electronic Health Records (EHRs) to track bed availability and physician workload. Such systems utilize Natural Language Processing (NLP) for triage automation, classifying cases into priority tiers, and have shown potential in reducing ICU admission prediction errors and wait times. Global evidence, including deep learning models in Seoul that improved ICU admission predictions by 34% and early physician triage protocols in Colombia that reduced Emergency Department Length of Stay (EDLOS) by 22%, validates the viability of AI-driven solutions for optimizing ER operations. These applications demonstrate AI's potential to prevent real-world tragedies by rerouting patients to available specialists or preparing for critical cases based on predictive analytics.

These examples underscore that while AI offers substantial benefits in improving patient prioritization, reducing wait times, and optimizing resource allocation, the integration of XAI is crucial to address challenges like data quality issues and algorithmic bias.
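The bias-detection approach described above, comparing outputs for identical records that differ only in the patient's sex, can be sketched as a counterfactual test. This is a simplified illustration and not the method of the cited study: `biased_score` is a hypothetical model with a deliberately planted gap, and the records are invented.

```python
def counterfactual_gap(score, records, attribute, value_a, value_b):
    """Mean score difference when only `attribute` is switched between two values."""
    gaps = [
        score({**r, attribute: value_a}) - score({**r, attribute: value_b})
        for r in records
    ]
    return sum(gaps) / len(gaps)


def biased_score(record):
    # Hypothetical model that has learned to under-score women by 0.1.
    base = 0.6 if record["chest_pain"] else 0.2
    return base - (0.1 if record["sex"] == "F" else 0.0)


records = [
    {"chest_pain": True, "sex": "F"},
    {"chest_pain": False, "sex": "M"},
]
gap = counterfactual_gap(biased_score, records, "sex", "M", "F")
print(round(gap, 3))  # 0.1
```

A gap near zero suggests the attribute does not directly change the score for otherwise identical records. A nonzero gap, as in this planted example, is a signal for closer review. The test does not catch bias that enters through correlated features.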

4. Navigating the Challenges: Ethical, Regulatory, and Technical Considerations

The deployment of AI in high-stakes environments like medical triage, while promising, is fraught with significant ethical, regulatory, and technical challenges. Addressing these concerns is paramount for ensuring responsible and trustworthy AI implementation.

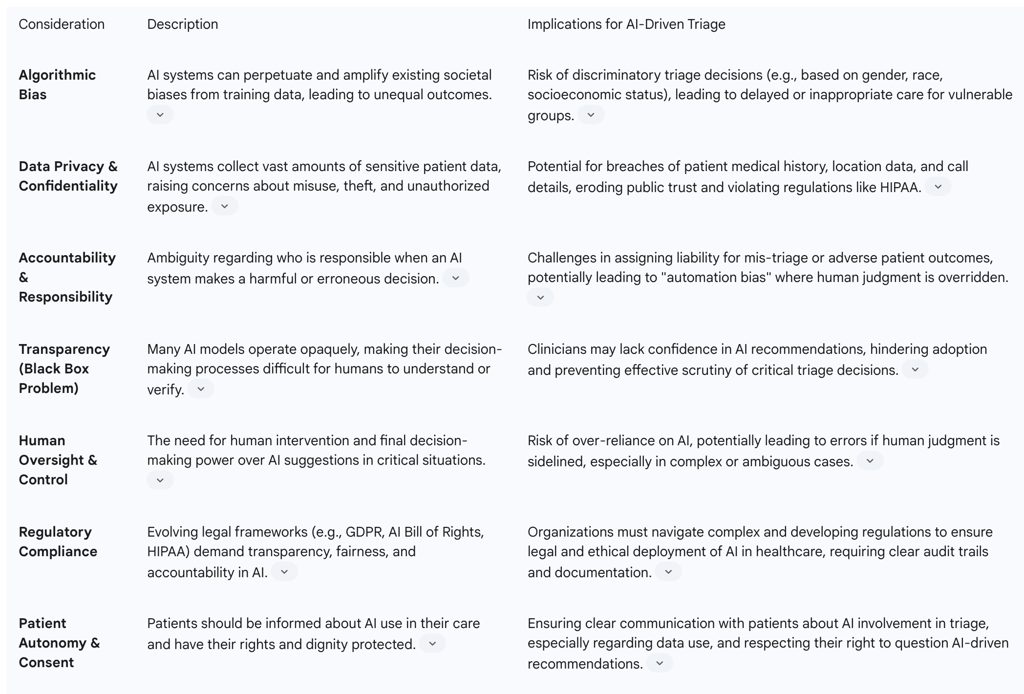

4.1 Addressing Algorithmic Bias and Ensuring Fairness

One of the most pressing ethical concerns in AI decision-making is algorithmic bias. AI systems learn from the data fed into them, and if that data reflects existing societal prejudices or inequalities, the AI will inadvertently perpetuate and even amplify these biases. In healthcare, this can lead to unequal outcomes for certain demographic groups, undermining trust and potentially creating a two-tiered healthcare system. For example, AI algorithms used to predict hospital readmission rates have been found to underestimate risk for underrepresented minority groups due to biased training data. Similarly, diagnostic tools trained primarily on images of lighter skin tones may be less accurate in diagnosing conditions in patients with darker skin.

Biases can also intersect with Social Determinants of Health (SDOH), leading to unequal access, lower-quality care, and misdiagnosis in marginalized populations. An algorithm using healthcare costs as a proxy for medical need, for instance, introduced implicit racial bias because less is typically spent on Black patients, leading to under-prioritization of their care. Identifying and mitigating biases requires proactive measures, including building fairness into AI systems from the ground up, using diverse and representative datasets for training, and establishing clear ethical guidelines. Regular audits of AI systems are crucial to find and fix any unfair results, and multidisciplinary teams involving ethicists, social scientists, data scientists, and medical professionals are essential during AI development to lower bias risks.

4.2 Upholding Data Privacy and Patient Confidentiality

AI systems in emergency services often collect vast amounts of private and sensitive information, including medical history, exact location, and details from emergency calls. The potential for misuse or theft of this data poses significant risks to individual privacy and can erode public trust in health systems. Regulatory frameworks, such as the Health Insurance Portability and Accountability Act (HIPAA) in the U.S. and the EU's General Data Protection Regulation (GDPR) for services that handle the data of people in the EU, mandate the protection of health information. However, laws about AI and data protection are still developing, creating gaps in coverage regarding transparency, cybersecurity, fairness, and responsibility.

Healthcare facilities and emergency providers must implement strong technical protections, including encryption, data anonymization, and regular security checks, to safeguard AI data. It is also critical that patients are informed if AI is used in handling their calls, especially if their data is stored or shared, ensuring patient autonomy and consent. Continuous monitoring and open communication between developers, healthcare workers, and regulators are necessary to prevent misinformation and unethical data use.
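One of these protections can be illustrated with Python's standard `hmac` and `hashlib` modules. The sketch below replaces a patient identifier with a keyed hash and drops direct identifiers before a record reaches an AI pipeline. The field names and the demo key are assumptions. This is pseudonymization rather than full anonymization, since anyone holding the key can re-link records, and it does not by itself satisfy any particular regulation.

```python
import hashlib
import hmac


def pseudonymize(identifier: str, secret_key: bytes) -> str:
    """Replace an identifier with a keyed SHA-256 digest (HMAC)."""
    return hmac.new(secret_key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()


def strip_direct_identifiers(record: dict, secret_key: bytes) -> dict:
    """Return a copy with the ID pseudonymized and name/phone fields dropped."""
    cleaned = {k: v for k, v in record.items() if k not in {"name", "phone"}}
    cleaned["patient_id"] = pseudonymize(record["patient_id"], secret_key)
    return cleaned


# Demo key only; a real key would come from a secrets manager, not source code.
key = b"demo-key-do-not-use"
raw = {"patient_id": "MRN-12345", "name": "Jane Doe", "phone": "555-0100", "spo2": 91}
safe = strip_direct_identifiers(raw, key)
print(safe)
```

Using a keyed hash rather than a plain hash matters because identifiers such as record numbers come from a small, guessable space and could otherwise be recovered by hashing every candidate.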

4.3 Establishing Accountability and Human Oversight

Accountability is a key ethical concern in healthcare AI, defining responsibility for AI-driven decisions in patient care. Unlike traditional medicine where clinicians are primarily accountable, AI involves multiple stakeholders, including developers, providers, and institutions. Errors or unsafe recommendations complicate accountability, especially when AI systems are opaque or lack documentation. Without clear accountability, patient safety risks increase, and trust erodes.

There is a risk of misaligned responsibility: AI developers may prioritize commercial interests over ethical considerations, and medical professionals may inadvertently place patients at risk by deferring to the system and feeling less personally responsible for the outcome. This tendency to accept AI results without scrutiny, known as "automation bias," raises concerns about human culpability. Robust frameworks are essential to ensure stakeholders prioritize ethical conduct and patient well-being. This includes ensuring AI systems have safety measures that allow humans to intervene at any time, with final decisions always made by human dispatchers or responders, thereby avoiding mistakes caused by over-reliance on AI. Organizations must also promote transparency about AI limitations and uncertainties to avoid over-reliance and foster an environment where clinicians feel empowered to question and override AI recommendations when appropriate.

4.4 Balancing Performance with Interpretability: Technical Trade-offs

A significant technical challenge in XAI is the trade-off between model performance (accuracy) and interpretability. Complex models, such as deep neural networks, often achieve high accuracy but rely on thousands of nonlinear interactions that are difficult to translate into human-interpretable rules. Simpler, inherently interpretable models like decision trees may be easier to explain but might underperform on complex tasks. This creates a tension: stakeholders demand both high accuracy and clear explanations, a combination that is rarely feasible with current methods.

Generating real-time explanations for large models can introduce computational overhead, potentially slowing down systems in production, which is a critical concern in time-sensitive environments like emergency triage. Furthermore, evaluating the quality of explanations is challenging, as there is no universally agreed-upon metric to measure if an explanation is "correct". Explanations can also be context-dependent and subjective, requiring tailoring to diverse audiences, which increases development complexity and validation efforts. There is also the risk that plausible-sounding reasons provided by XAI might create false confidence, leading users to overtrust a model even if the explanations are incomplete or misleading. Addressing these issues requires careful design, ongoing validation, and clear communication about the limits of XAI tools.

4.5 Regulatory Landscape and Compliance Requirements

Regulations such as the EU's GDPR, and policy guidance such as the U.S. Blueprint for an AI Bill of Rights (a non-binding White House framework), call for transparency in AI-driven decisions, highlighting the growing need for explainable AI to ensure compliance. In healthcare, AI tools must follow federal rules like HIPAA and other privacy laws, as well as industry standards from organizations like ANSI/CTA and NENA. The World Health Organization (WHO) also emphasizes that AI should be clear and well-documented, with all steps of software development, including data used for training, updates, and decision processes, being recorded.

The regulatory landscape for AI is still evolving, and gaps in laws create risks concerning transparency, cybersecurity, fairness, and responsibility. This necessitates that healthcare managers choose AI products that come with detailed information from developers and adhere to established protocols that prevent AI from replacing human judgment. The challenge lies in developing scalable, automated approaches that can adapt to evolving regulatory requirements while maintaining interpretability and reliability. Ultimately, fostering responsible AI use in clinical settings requires aligning technological innovation with human-centered values, legal safeguards, and professional accountability.

Table 4: Ethical and Regulatory Considerations in AI-Driven Triage

5. Towards a Transparent Future: Recommendations for Responsible AI in Triage

Achieving a future where AI responsibly enhances medical triage requires a concerted effort to embed transparency, fairness, and accountability into every stage of AI system development and deployment. The following recommendations outline a path forward for integrating Explainable AI in high-stakes environments.

5.1 Prioritizing "Interpretability by Design"

Instead of attempting to explain opaque "black box" models after they are built, the focus should shift towards designing AI systems with inherent interpretability from the outset. This means prioritizing models that are intrinsically transparent, where their decision-making logic is clear and understandable without requiring complex post-hoc explanations. While there is a trade-off between simplicity and performance, selecting the most interpretable model that still achieves good predictive results is generally advisable for critical applications like triage. This approach ensures that transparency is a foundational element, fostering higher trust and easier debugging from the ground up.

5.2 Implementing Robust Bias Detection and Mitigation Strategies

Given the significant risk of algorithmic bias, proactive and continuous strategies for detection and mitigation are essential. This involves building teams with diverse backgrounds and perspectives to inform AI development, establishing clear ethical guidelines, and developing frameworks to continually assess and improve the fairness of AI algorithms. Training data must be representative of the diverse populations the AI will serve, with active work to identify and minimize subtle biases. Regular audits of AI systems, focusing on performance across different demographic groups, should be established to identify and rectify any unfair outcomes. This commitment to equitable outcomes is crucial for preventing AI from perpetuating or amplifying existing healthcare disparities.
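A recurring audit of this kind can start from a simple per-group error rate. The sketch below computes the under-triage rate, that is, the share of truly urgent cases the model labeled non-urgent, for each demographic group. The group labels and cases are invented, and under-triage is only one of several fairness metrics an audit might track.

```python
from collections import defaultdict


def under_triage_rate_by_group(cases):
    """Share of truly urgent cases per group that the model labeled non-urgent.

    Each case is a tuple (group, truly_urgent, predicted_urgent).
    """
    urgent = defaultdict(int)
    missed = defaultdict(int)
    for group, truly_urgent, predicted_urgent in cases:
        if truly_urgent:
            urgent[group] += 1
            if not predicted_urgent:
                missed[group] += 1
    return {g: missed[g] / urgent[g] for g in urgent}


cases = [
    ("A", True, True), ("A", True, True), ("A", True, False), ("A", False, False),
    ("B", True, False), ("B", True, False), ("B", True, True), ("B", False, True),
]
rates = under_triage_rate_by_group(cases)
print(rates)
```

In this invented data the model misses one of three urgent cases in group A but two of three in group B. A persistent gap of that kind is what an audit is meant to surface for review.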

5.3 Strengthening Data Governance and Security Protocols

The extensive collection of sensitive patient data by AI systems necessitates robust data governance and cybersecurity measures. Healthcare facilities and AI providers must implement strong technical protections, including encryption, anonymization, and secure storage, to safeguard patient information. Regular security testing, including stress testing and vulnerability assessments, should be routine to identify and mitigate risks of adversarial attacks or malicious inputs. Clear rules about data use and patient consent must be established and communicated transparently, ensuring that patients are fully aware of how their information is utilized by AI systems. Compliance with federal regulations like HIPAA and international privacy laws is non-negotiable.

5.4 Cultivating Human-AI Collaboration and Training

Effective integration of AI in triage relies on fostering seamless collaboration between human clinicians and AI systems, rather than allowing AI to replace human judgment. This requires implementing workflows that facilitate human-AI interaction with built-in safety checks, ensuring that final decisions always rest with trained medical professionals. Comprehensive training programs are vital to educate clinicians on AI ethics, potential pitfalls, and appropriate use of AI tools. This includes integrating AI literacy into medical education and continuing professional development, empowering clinicians to question and override AI recommendations when appropriate. Engaging healthcare staff, including emergency physicians, nurses, and technicians, in the AI development and testing processes from the beginning is crucial to ensure AI explanations align with clinical reasoning patterns and build confidence.

5.5 Developing Adaptive Regulatory Frameworks

The rapid evolution of AI necessitates adaptive and comprehensive regulatory frameworks that can keep pace with technological advancements. These frameworks should establish clear accountability for AI-driven decisions, outlining responsibilities for developers, deployers, and healthcare providers. Regulations should mandate transparency in AI systems, requiring clear documentation of development processes, data used for training, and decision logic. Furthermore, they should encourage and incentivize the development of AI that is inherently interpretable and fair, rather than relying solely on post-hoc explanations. Collaborative efforts among clinicians, AI researchers, ethicists, legal experts, and policymakers are essential to develop and refine these frameworks, ensuring that AI deployment in healthcare aligns with ethical principles and patient well-being.
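To make the documentation requirement concrete, the following sketch shows one possible shape for a traceable decision record. It ties each output to a model version, a training data identifier, the inputs, and the explanation, then adds a hash so that later edits to the record can be detected. The field names and identifiers are hypothetical, and no regulation is known to prescribe this exact format.

```python
import hashlib
import json

def _canonical(payload):
    """Serialize deterministically so the same content always hashes the same."""
    return json.dumps(payload, sort_keys=True, separators=(",", ":"))

def decision_record(model_version, training_data_id, inputs, output, explanation):
    """Build a record linking one decision to its model, data, and rationale."""
    payload = {
        "model_version": model_version,
        "training_data_id": training_data_id,
        "inputs": inputs,
        "output": output,
        "explanation": explanation,
    }
    digest = hashlib.sha256(_canonical(payload).encode("utf-8")).hexdigest()
    payload["record_hash"] = digest
    return payload

def verify(record):
    """Recompute the hash over everything except the stored hash itself."""
    body = {k: v for k, v in record.items() if k != "record_hash"}
    expected = hashlib.sha256(_canonical(body).encode("utf-8")).hexdigest()
    return expected == record["record_hash"]

record = decision_record(
    model_version="triage-model-1.3.0",          # hypothetical identifier
    training_data_id="ed-visits-2023-snapshot",  # hypothetical identifier
    inputs={"heart_rate": 118, "spo2": 91},
    output="Red",
    explanation=["low oxygen saturation", "elevated heart rate"],
)
print(verify(record))
```

A plain hash only detects accidental or naive changes. A deployed audit trail would likely also need signing, access control, and retention rules.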

Conclusion

The integration of Artificial Intelligence into medical triage holds immense potential to revolutionize emergency care, offering benefits such as improved patient prioritization, reduced wait times, and enhanced diagnostic support. However, realizing these benefits responsibly hinges on addressing the inherent opacity of "black box" AI models. Explainable AI (XAI) provides the critical bridge, transforming opaque decisions into transparent, understandable insights.

By adhering to core principles of interpretability, transparency, traceability, and justifiability, XAI fosters trust among clinicians and patients alike. Techniques like LIME, SHAP, and the emerging use of Large Language Models for explanation offer pathways to demystify complex AI outputs, enabling healthcare professionals to confidently leverage AI recommendations while maintaining human oversight.

Despite these advancements, significant challenges persist. Algorithmic biases embedded in training data can lead to discriminatory outcomes, demanding proactive mitigation strategies and diverse data representation. Upholding patient data privacy and establishing clear accountability frameworks are paramount, especially given the multi-stakeholder nature of AI development and deployment. Furthermore, the fundamental trade-off between AI performance and interpretability, alongside computational overheads, requires careful consideration and strategic design choices.

Moving forward, the imperative is to prioritize "interpretability by design," ensuring that transparency is a foundational element of AI systems rather than an afterthought. This, coupled with robust bias detection, stringent data governance, continuous human-AI collaboration, and agile regulatory frameworks, will pave the way for a future where AI in triage is not only highly effective but also ethically sound, trustworthy, and truly transparent. The journey towards fully explainable AI in healthcare is complex, but it is an essential one for ensuring equitable, safe, and patient-centered care.

FAQ Section

What is explainable AI in triage?

Explainable AI (XAI) in triage refers to AI systems that provide clear and understandable explanations for their recommendations, making it easier for clinicians to trust and act on the AI's outputs.

Why is explainability important in AI-driven triage systems?

Explainability is crucial because it enhances clinicians' trust in AI recommendations, allows for better identification and correction of biases, and facilitates ethical and legal accountability.

What are some strategies to enhance explainability in AI?

Strategies include developing inherently explainable models, using post-hoc explanation methods, and incorporating clinician feedback into the development process.

How can clinicians be trained to use explainable AI effectively?

Clinicians can be trained through educational programs to interpret AI recommendations, communicate limitations to patients, and understand the ethical considerations of AI use.

What are the ethical considerations of using AI in healthcare?

Ethical considerations include assigning responsibility for AI-driven decisions, ensuring informed consent, and maintaining patient autonomy and trust.

How does explainable AI impact patient outcomes?

Explainable AI can improve patient outcomes by enhancing diagnostic accuracy, reducing triage time, and increasing patient satisfaction and trust in the care process.

What are the challenges of implementing explainable AI in healthcare?

Challenges include balancing explainability with performance, addressing biases in AI systems, and ensuring that AI recommendations align with clinical knowledge and practices.

How can regulatory frameworks support the use of explainable AI in healthcare?

Regulatory frameworks can support explainable AI by addressing transparency, accountability, and fairness, and by fostering interdisciplinary collaboration and continuous monitoring.

What is the role of clinician feedback in developing explainable AI?

Clinician feedback ensures that AI models align with clinical knowledge and practices, making the recommendations more understandable and trustworthy.

How does explainable AI address the black box problem?

Explainable AI addresses the black box problem by providing precise and understandable explanations for AI recommendations, making it easier for clinicians to trust and act on the AI's outputs.

Additional Resources

For readers interested in exploring this topic further, here are some reliable sources: