AI Triage for Mental Health: Detecting Subtle Signs and Appropriate Pathways

Explore the transformative role of AI in mental health triage, from detecting subtle signs to guiding appropriate treatment pathways. Learn how AI enhances clinical efficiency and patient outcomes.

The escalating global mental health crisis presents an urgent need for innovative solutions to overcome pervasive barriers to care, including provider shortages, geographical isolation, and social stigma. Artificial intelligence (AI) is emerging as a transformative force in mental healthcare, particularly through its application in triage systems. AI-powered triage involves the sophisticated analysis of diverse patient data to provide preliminary assessments, determine urgency levels, and recommend tailored care pathways. This report explores the mechanisms by which AI detects subtle indicators of mental distress, leveraging advanced machine learning, deep learning, and natural language processing to identify patterns often imperceptible to human observation in traditional settings.

The benefits of AI in this domain are substantial, encompassing enhanced accessibility, improved efficiency in clinical workflows, and greater accuracy in early detection, leading to more timely and personalized interventions. However, the integration of AI is not without significant challenges. Critical considerations include the potential for algorithmic bias, stringent requirements for data privacy and security, the inherent limitations of AI in replicating genuine human empathy, and the evolving landscape of regulatory oversight and accountability. This report argues that AI's optimal role is to augment, rather than replace, human clinical capacity, serving as a force multiplier for mental health professionals. The successful and ethical deployment of AI in mental health triage necessitates a human-centric, responsible approach, fostering collaboration among technologists, clinicians, policymakers, and patients to ensure equitable, safe, and effective solutions for the future of mental healthcare.

II. Introduction to AI Mental Health Triage

Defining AI Triage in Mental Healthcare

AI triage in mental health refers to the strategic application of artificial intelligence algorithms to systematically analyze a wide array of patient data. This process aims to generate preliminary assessments of an individual's mental health status, assign urgency levels, and suggest appropriate care pathways. Data inputs can be highly diverse, encompassing structured information from intake questionnaires and electronic health records (EHRs), as well as unstructured data derived from voice or text analysis. The fundamental purpose of this AI-driven approach is to assist clinicians by providing data-driven insights and recommendations, thereby enhancing the efficiency and effectiveness of the triage process. It is important to note that AI functions as a sophisticated personal assistant, capable of analyzing data, making recommendations, and collecting information on specific topics, rather than operating as an autonomous diagnostic or treatment entity.

The Global Mental Health Crisis and the Role of AI

The global burden of mental disorders is profound and escalating, impacting millions of individuals worldwide and imposing significant social and economic costs. In 2019, approximately 970 million people globally were living with a mental disorder, with mental illnesses projected to cost the global economy around $16 trillion between 2010 and 2030. Despite a steady rise in adults seeking care, access remains severely limited, with nearly 30 million adults in the U.S. alone not receiving any treatment. This crisis is exacerbated by persistent systemic barriers, including geographical isolation, a severe shortage of mental health specialists, strained healthcare resources, and the pervasive social stigma associated with seeking mental health support. For instance, there are only 13 mental health workers per 100,000 people globally, with a stark 40-fold difference between high-income and low-income nations. This leads to an estimated 85% of individuals with mental health issues not receiving necessary treatment.

In response to these critical challenges, AI is rapidly emerging as a vital tool. It offers a tangible pathway to bridge existing care gaps by improving accessibility, enhancing diagnostic efficiency, and optimizing treatment delivery. The deployment of AI is not merely an incremental technological upgrade but a strategic response to fundamental systemic failures in mental healthcare access and efficiency. The aim is to fundamentally restructure service delivery, enabling a more resilient and accessible healthcare infrastructure capable of addressing the crisis at scale.

Goals and Scope of AI Triage: Enhancing Access, Efficiency, and Early Intervention

The overarching objectives of integrating AI into mental health triage are multifaceted, focusing on enhancing the entire care continuum. A primary goal is to improve the efficiency of clinical workflows and address the critical shortage of mental health providers. By automating parts of the initial assessment process, AI tools can free up valuable clinician time, allowing clinics to process more patients, potentially reducing wait times, and improving overall clinic flow. This streamlined approach can also alleviate administrative burdens and clinician burnout.

Furthermore, AI significantly enhances early detection capabilities. By analyzing subtle data patterns that a human reviewer might miss during a quick initial interaction, AI can flag individuals at higher risk of certain conditions, facilitating earlier intervention. This proactive approach can lead to better patient outcomes and potentially reduce the need for more intensive, costly interventions later. AI also plays a role in automating administrative tasks for professionals, opening up more time for direct patient interaction.

A consistent theme across various discussions is that AI is intended to augment, rather than replace, human clinical capacity. This perspective is crucial for fostering adoption and ethical integration within the healthcare community. The emphasis on AI assisting clinicians, not making independent diagnoses or treatment plans, acknowledges the irreplaceable human elements of empathy, nuanced judgment, and the therapeutic relationship in mental health care. The most effective future models are therefore anticipated to be hybrid, where human clinicians retain ultimate decision-making authority, leveraging AI for efficiency, data synthesis, and preliminary assessments, thereby optimizing their time for direct, empathetic patient care.

III. Mechanisms of AI-Powered Detection

Core AI Technologies: Machine Learning, Deep Learning, Natural Language Processing

The efficacy of AI-powered mental health detection is rooted in sophisticated computational techniques. Machine Learning (ML) algorithms form the bedrock, enabling systems to identify subtle indicators of mental health conditions through pattern recognition, classification, regression, and predictive modeling. These algorithms learn from vast datasets to make informed predictions about an individual's mental state.

Deep Learning (DL), a specialized subset of ML, further amplifies these capabilities, particularly in processing complex, unstructured data such as raw audio and medical images. DL networks, including Convolutional Neural Networks (CNNs) and Recurrent Neural Networks (RNNs) like Long Short-Term Memory (LSTM), are adept at identifying intricate features and patterns that might be challenging for traditional ML models. For instance, LSTM frameworks have demonstrated superior performance in uncovering complex patterns in voice recordings for anxiety and depression detection.

Natural Language Processing (NLP) is indispensable for analyzing human language, both spoken and written. NLP tools extract significant concepts from patient interactions, medical records, and therapy notes. This technology enables sentiment analysis, identifying linguistic distinctions associated with psychological conditions, such as alterations in speech linked with schizophrenia or bipolar disorder. NLP is also crucial for assessing patient responses during therapy and adjusting treatment plans. Beyond these, Computer Vision is utilized for analyzing imaging data and understanding non-verbal cues like facial expressions, gestures, and eye gaze.

Specific algorithms commonly employed include Logistic Regression, Support Vector Machines (SVM), Decision Trees, Random Forest, K-Nearest Neighbors (KNN), Bagging, Boosting, and Extreme Gradient Boosting (XGBoost) for ML tasks. In DL, Deep Belief Networks (DBN) and Auto-encoders (AE) are also used, and Transformer-based models (e.g., GPT, BERT) are leveraged for their generative and comprehension capabilities in nuanced therapeutic dialogue.

Diverse Data Sources for Analysis

AI systems in mental health leverage a wide array of data sources to construct comprehensive and dynamic patient profiles. Structured data inputs are fundamental, including information from electronic health records (EHRs), patient intake questionnaires, and clinical notes. The secure integration of AI solutions with existing EHR systems is a crucial first step for effective data management and analysis.

Beyond structured data, unstructured information is critically important. This includes analysis of voice and speech patterns, such as tone, pitch, speed, and patterns of silence, which can reveal emotional states. Facial expressions and other behavioral cues, detectable through computer vision, also provide valuable insights into a person's mental state.

Physiological indicators gathered from wearable devices, such as heart rate variability, skin conductance, sleep patterns, and physical activity levels, offer continuous, real-time insights into an individual's well-being. Furthermore, digital footprints from social media activity and smartphone usage patterns (e.g., typing speed, browsing habits) are increasingly leveraged to understand day-to-day activities and challenges outside of traditional clinical settings. Other crucial data types include genetic information, lifestyle factors, and responses from structured surveys.

The collection and analysis of such a diverse and voluminous array of data sources represent a fundamental shift towards what can be termed a "holistic digital phenotype" in mental health assessment. This goes beyond the episodic, subjective evaluations of traditional clinical encounters. By continuously monitoring and integrating disparate, often subtle, and continuously generated data streams, AI can construct a comprehensive, dynamic, and objective digital profile of an individual's mental state. This capability provides insights into an individual's day-to-day activities and challenges that are otherwise inaccessible, enabling a more nuanced and continuous understanding of mental well-being.

Analytical Techniques for Subtle Sign Identification

AI employs a variety of sophisticated analytical techniques to identify subtle signs of mental distress. Predictive analytics is a key methodology, utilizing past and present data to estimate the likelihood of developing mental health disorders. This involves spotting complex patterns that might not be obvious to human reviewers, such as correlations between genetic information, lifestyle factors, and past medical history.
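As a rough illustration of how predictive analytics turns several factors into a single likelihood estimate, the sketch below applies a logistic model to three binary features. The feature names, weights, and bias are hypothetical values chosen for illustration; a real system would learn them from validated clinical data.

```python
import math

# Hypothetical weights for illustration only; not fitted to any dataset.
WEIGHTS = {"family_history": 0.8, "poor_sleep": 0.6, "prior_episode": 1.1}
BIAS = -2.0


def risk_probability(features: dict[str, float]) -> float:
    """Logistic model: map a weighted sum of features to a value in (0, 1)."""
    z = BIAS + sum(WEIGHTS[name] * value for name, value in features.items())
    return 1.0 / (1.0 + math.exp(-z))


no_factors = risk_probability(
    {"family_history": 0, "poor_sleep": 0, "prior_episode": 0}
)
all_factors = risk_probability(
    {"family_history": 1, "poor_sleep": 1, "prior_episode": 1}
)
print(f"no factors: {no_factors:.2f}, all factors: {all_factors:.2f}")
```

The output is a probability-like score, not a diagnosis; it is the kind of signal a clinician would weigh alongside other information.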

Diagnostic algorithms, frequently powered by machine learning, process diverse data from patient interviews, psychological tests, and EHRs to suggest potential disorders and risk levels. These algorithms can analyze voice and speech patterns, body movements, and even writing to assess the presence of affective or psychiatric disorders.

Natural Language Processing (NLP) is particularly effective for sentiment analysis, extracting emotional cues and linguistic markers from both text and speech. It can recognize subtle distinctions in language associated with psychological conditions, such as alterations in speech linked with schizophrenia or bipolar disorder. Behavioral analysis focuses on identifying specific patterns in physical actions, such as idle sitting, nail biting, knuckle cracking, and hand tapping, which can be monitored using sensors and analyzed with deep learning algorithms. Computer vision analyzes visual cues such as facial expressions.

The reliance on diverse, often subtle, data sources and advanced ML, DL, and NLP techniques signifies a growing capability to detect mental health issues before overt clinical symptoms fully manifest. This represents a pivotal shift from reactive diagnosis to proactive prevention. AI achieves this by analyzing data patterns that might be subtle to a human reviewer during a quick initial interaction, identifying early signs of mental health conditions through continuous monitoring of speech patterns, social media activity, or wearable data, and flagging individuals at higher risk to facilitate earlier intervention. This capability allows for intervention based on subtle, data-driven risk signals, moving towards a truly preventative model of mental health support.

IV. Detecting Subtle Signs of Mental Distress

Early Warning Indicators Identified by AI

AI systems are meticulously trained to recognize a broad spectrum of subtle indicators that may signal nascent or worsening mental distress. These indicators span behavioral, linguistic, physiological, and digital footprint domains.

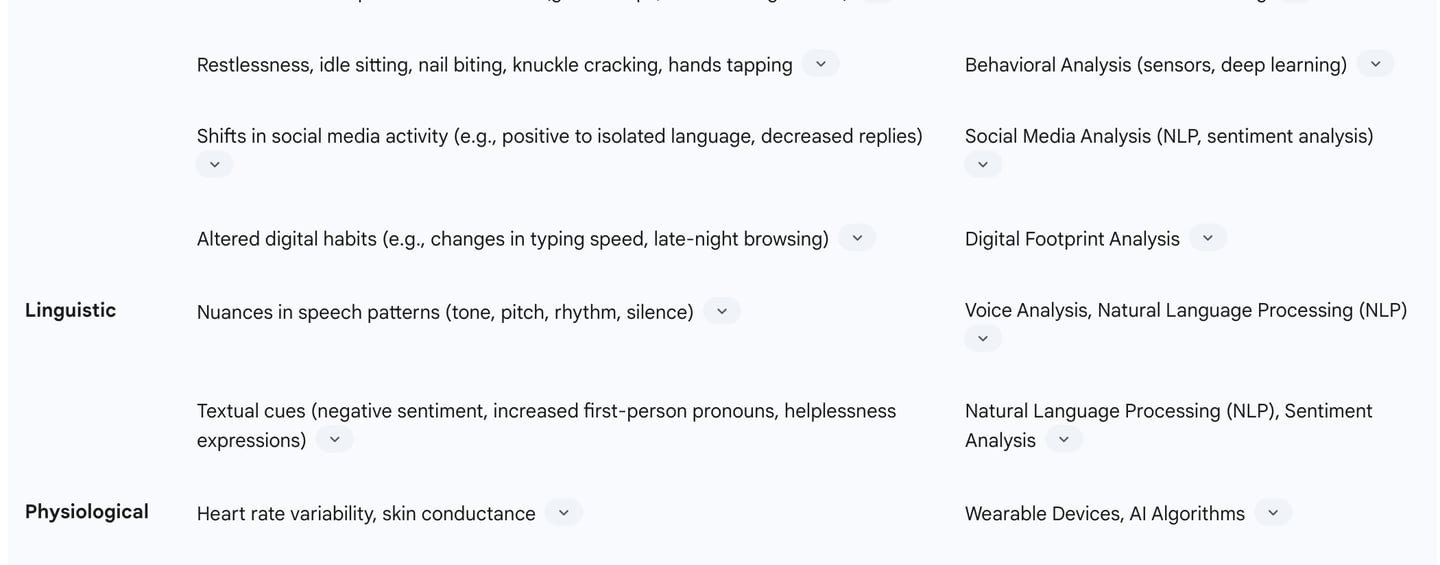

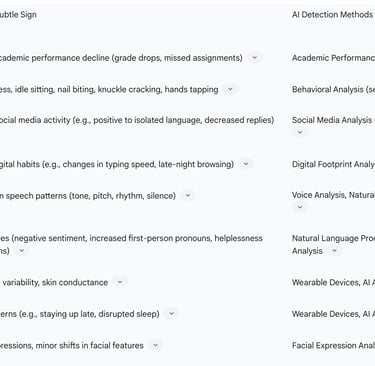

Behavioral Changes are a key area of detection. AI can identify anomalies such as sudden declines in academic performance, including drops in grades, increased missed assignments, or changes in attendance patterns. In broader contexts, AI monitors shifts in social media activity, such as a transition from positive to isolated language or a decrease in replies to friends. Altered digital habits, including changes in typing speed, late-night browsing, or rewatching the same videos, can also serve as early flags. More specific physical behaviors like restlessness, idle sitting, nail biting, knuckle cracking, and hand tapping are also detectable through AI algorithms.

Linguistic Markers, identified through Natural Language Processing (NLP), offer profound insights. AI analyzes nuances in speech patterns, including tone, pitch, rhythm, and patterns of silence, which can indicate emotional distress. Textual cues from messages, social media posts, or chat logs, such as negative sentiment, increased use of first-person pronouns, or expressions of helplessness, are also crucial indicators. AI systems trained in sentiment analysis can spot shifts from lighthearted language to darker, more withdrawn speech, for example, from "I feel great today" to "Nothing matters anymore".
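The textual cues described above can be sketched as simple counts over a message. The word lists here are hypothetical placeholders, not a validated clinical lexicon, and production systems typically rely on trained language models rather than keyword matching.

```python
import re

# Hypothetical word lists for illustration only.
FIRST_PERSON = {"i", "me", "my", "mine", "myself"}
NEGATIVE_WORDS = {"nothing", "hopeless", "helpless", "worthless", "alone"}


def linguistic_markers(text: str) -> dict[str, float]:
    """Return the share of first-person and negative words in a message."""
    tokens = re.findall(r"[a-z']+", text.lower())
    total = len(tokens) or 1  # avoid division by zero on empty input
    return {
        "first_person_ratio": sum(t in FIRST_PERSON for t in tokens) / total,
        "negative_ratio": sum(t in NEGATIVE_WORDS for t in tokens) / total,
    }


earlier = linguistic_markers("I feel great today")
later = linguistic_markers("Nothing matters anymore")
print(earlier, later)
```

A rise in the negative ratio between the two messages is the kind of shift a monitoring system might surface for human review; a single message on its own says very little.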

Physiological Indicators from wearable devices provide objective data. AI analyzes variations in heart rate variability, skin conductance, and sleep patterns (e.g., staying up late, disrupted sleep), which are associated with stress responses and can signal depression or anxiety symptoms.
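One common way to summarize heart rate variability is RMSSD, the root mean square of successive differences between heartbeat intervals. The source does not specify which metric is used, so the choice of RMSSD and the sample values below are assumptions for illustration.

```python
import math


def rmssd(rr_intervals_ms: list[float]) -> float:
    """Root mean square of successive differences between RR intervals (ms)."""
    if len(rr_intervals_ms) < 2:
        raise ValueError("need at least two RR intervals")
    diffs = [b - a for a, b in zip(rr_intervals_ms, rr_intervals_ms[1:])]
    return math.sqrt(sum(d * d for d in diffs) / len(diffs))


# Hypothetical beat-to-beat intervals from a wearable, in milliseconds.
sample = [800.0, 810.0, 790.0, 805.0]
print(f"RMSSD: {rmssd(sample):.1f} ms")
```

A single reading means little in isolation; the value becomes informative when tracked against an individual's own baseline over time.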

The effectiveness of AI in detecting these subtle signs is directly tied to its ability to process and correlate multimodal, continuous data that is often imperceptible or overwhelming for human clinicians during traditional, episodic interactions. Human clinicians, in a typical brief interaction, cannot continuously monitor or synthesize such a vast and varied stream of subtle cues. AI's strength lies in its capacity for continuous, objective data collection and its algorithmic ability to identify complex, non-linear relationships and temporal shifts that would be imperceptible to human observation. This capability moves mental health assessment beyond subjective, snapshot evaluations to a more objective, longitudinal, and comprehensive understanding of an individual's mental state, enabling detection before acute symptoms or crises emerge.

Table: Common Subtle Mental Health Indicators and AI Detection Methods

Case Studies in Subtle Detection

Real-world applications and research studies demonstrate AI's capability to detect subtle signs across a spectrum of mental health conditions. For depression and anxiety, AI models analyzing vocal patterns in verbal fluency tests have achieved accuracy rates ranging from 70% to 83%, and one study reported up to 80% accuracy in detecting depression through voice tone, facial expressions, and keystroke dynamics. Limbic's AI-powered psychological assessment and triage tools, for instance, have achieved a 93% accuracy rate in diagnosing eight common mental illnesses, including PTSD and anxiety, leading to fewer treatment adjustments and clinician time savings.

In the critical area of suicidality prediction, AI models have shown accuracies up to 92% by analyzing user behavior and linguistic markers, often incorporating data from electronic health records and social media posts. This capability facilitates proactive intervention, potentially saving lives.

AI also contributes significantly to the early detection of complex conditions like schizophrenia and bipolar disorder. This is achieved through the analysis of speech patterns, brain scans (fMRI, sMRI), and comprehensive EHR data. For example, a classifier based on structural MRI data could predict psychosis onset in adolescents with 73% accuracy in an independent validation dataset. The Winterlight Labs platform, meanwhile, uses speech analysis and NLP to detect early signs of dementia and other cognitive impairments.

Furthermore, AI systems are actively being developed and tested to monitor student behavior in academic settings for early signs of stress and anxiety, flagging anomalies like sudden grade drops, restlessness, or negative verbal cues for teachers or counselors.
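Flagging a sudden grade drop can be sketched as a comparison against the student's own recent baseline. The z-score rule and the threshold of 2.0 below are assumptions for illustration, not a method taken from the systems described here.

```python
from statistics import mean, stdev


def is_anomalous(history: list[float], current: float, threshold: float = 2.0) -> bool:
    """Flag a value that deviates sharply from an individual's own baseline."""
    if len(history) < 2:
        raise ValueError("need at least two baseline observations")
    spread = stdev(history)
    if spread == 0:
        # A perfectly flat baseline: any change at all counts as a deviation.
        return current != history[0]
    z_score = (current - mean(history)) / spread
    return abs(z_score) >= threshold


recent_grades = [82.0, 85.0, 80.0, 84.0, 83.0]  # hypothetical data
print(is_anomalous(recent_grades, 65.0), is_anomalous(recent_grades, 81.0))
```

A flag of this kind is only a prompt for a teacher or counselor to check in; it carries no information about why the change occurred.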

While AI demonstrates high accuracy in detection and prediction of mental health indicators, a crucial distinction is consistently made between AI's capabilities and the human role in diagnosis. AI assists clinicians by providing preliminary assessments and identifying potential issues, but clinical diagnosis and treatment plans require human expertise and empathy. For example, voice analysis for anxiety and depression is viewed as a complementary screening method, not a standalone diagnostic tool. This consistent message indicates that AI provides powerful probabilistic signals and risk assessments, but the final diagnostic judgment, which requires nuanced contextual understanding, empathy, clinical experience, and ethical considerations, remains firmly within the human domain. This boundary is paramount for responsible implementation, ensuring patient safety and maintaining the integrity of the therapeutic process.

V. Navigating Appropriate Care Pathways

AI-Assisted Triage for Urgency and Referrals

AI-powered triage systems are designed to optimize the process of guiding patients to the most suitable care. These systems provide preliminary assessments of a patient's mental health status and recommend urgency levels, distinguishing between those needing immediate attention versus those who can be managed with routine follow-ups or referrals. This capability is particularly valuable in settings with limited resources, such as rural clinics, where overburdened primary care providers can use AI to prioritize care efficiently.
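The mapping from a preliminary assessment to an urgency level might look like the following minimal sketch. The `Assessment` fields, the three urgency tiers, and the numeric thresholds are all hypothetical; in practice they would be set and validated clinically, and the output would be a recommendation for a clinician to confirm.

```python
from dataclasses import dataclass
from enum import Enum


class Urgency(Enum):
    IMMEDIATE = "immediate"
    PRIORITY = "priority"
    ROUTINE = "routine"


@dataclass
class Assessment:
    risk_score: float  # hypothetical model output in [0, 1]
    crisis_flag: bool  # e.g. explicit crisis language detected at intake


def recommend_urgency(a: Assessment, high: float = 0.7, low: float = 0.3) -> Urgency:
    """Suggest an urgency tier; thresholds are illustrative, not clinical."""
    if not 0.0 <= a.risk_score <= 1.0:
        raise ValueError("risk_score must be between 0 and 1")
    if a.crisis_flag or a.risk_score >= high:
        return Urgency.IMMEDIATE
    if a.risk_score >= low:
        return Urgency.PRIORITY
    return Urgency.ROUTINE


print(recommend_urgency(Assessment(risk_score=0.2, crisis_flag=True)))
```

Letting the crisis flag override a low score reflects a deliberately cautious design: when in doubt, the case is escalated to a human sooner rather than later.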

By automating parts of the initial assessment process, AI frees up valuable clinician time, allowing clinics to process more patients, potentially reducing wait times and improving overall clinic flow. For example, at Kingston Health Sciences Centre (KHSC), an AI-assisted triage system helped cut appointment wait times by more than 50% between 2023 and 2024. The Online Psychotherapy Tool (OPTT) at KHSC uses machine learning to assess patient needs and recommend appropriate levels of care, immediately flagging crisis situations for urgent intervention. Similarly, an AI-enabled self-referral tool in NHS Talking Therapies services led to a 15% increase in total referrals, significantly larger than the baseline increase observed with traditional methods.

AI also revolutionizes patient referral management by matching individuals with the right specialists based on clinical need, automating intake and communication, and reducing manual errors. Modern systems leverage machine learning algorithms to predict if referrals will be "clean or rejected," and Natural Language Processing (NLP) to extract and interpret data from referral documents, cutting processing time drastically (e.g., from 10 minutes to 10 seconds). These technologies have demonstrated significant success in improving healthcare access for underserved populations, with one study reporting a 179% increase in nonbinary patients and a 29% increase in ethnic minority individuals accessing care through AI-facilitated referrals. Limbic AI's "Limbic Access" tool, for instance, has shown a 15% increase in referrals, with the largest increases in minority groups, and a 5-day reduction in patient waitlist times.

Personalized Interventions and Treatment Plans

AI plays a crucial role in moving mental healthcare away from a one-size-fits-all model towards highly personalized interventions and treatment plans. By analyzing vast amounts of individual user data, including symptoms, history, lifestyle, and even genetic information, AI can recommend customized therapy techniques and optimize medication management. This tailored approach aims to improve patient outcomes by ensuring compatibility between patient needs and provider expertise.

AI can drive computerized cognitive-behavioral therapy (CBT) programs, which focus on changing thinking patterns and behavioral patterns. AI-powered chatbots and apps, such as Woebot, Wysa, and Youper, are widely used in this context, providing evidence-based support and self-care techniques drawn from CBT, Dialectical Behavior Therapy (DBT), mindfulness, and positive psychology. These conversational AI tools offer real-time assistance for anxiety and depression, recognizing distress patterns and providing CBT-based responses.

Furthermore, AI enables real-time feedback and progress tracking for patients, allowing healthcare professionals to continually adjust treatment plans based on the patient's unique response, even outside of therapy sessions. Spring Health, for example, uses AI for guided pre-appointment intake, session summaries, takeaways, and automated journaling, reinforcing insights and encouraging follow-through between sessions. AI can also advance sentiment analysis in mental health care, refining therapy approaches over time to enhance patient engagement.

AI in Crisis Management and Continuous Support

AI systems are increasingly integrated into crisis management protocols and provide continuous support to individuals. They can immediately flag crisis situations for urgent intervention, as demonstrated by the OPTT platform. Some AI counseling tools, like the Trevor Project's Riley, provide crisis support and resources for LGBTQ youth, while predictive analytics tools analyze voice patterns to identify signs of emotional distress. AI-powered applications are also used in suicide prevention to identify early signs of suicidal thoughts, offering timely interventions and resources, directing users to hotlines and support systems.

The 24/7 availability of AI-driven mental health assistants, such as chatbots and trackers, allows patients to remain engaged in their care around the clock, whether by tracking symptoms or talking to a virtual support assistant. These tools can offer real-time emotional analysis and guidance, helping patients manage high emotional states. Spring Health's "in-the-moment conversations" provide 24/7 support for members to work through emotions or access guided support tools, with the ability to escalate to a licensed clinician if needed. Kana Health also offers crisis management support for therapists.

However, the constant availability of AI also presents risks. There is a concern that users might become overly dependent on AI, potentially increasing social isolation or leading to a diminished ability to deal with conflicts. Unlike human relationships, interactions with AI are not symmetrical or mutual. Some worry that AI's availability could justify the removal of current mental health care services or diminish the therapist's monitoring role, exacerbating healthcare problems, such as increasing the risk of incorrect self-diagnosis. Furthermore, there are serious concerns about AI's ability to respond adequately to crises and suicidality, with instances of chatbots providing inappropriate or harmful advice. This underscores the critical need for human oversight and clear protocols for escalating to human intervention in high-risk scenarios.

VI. Benefits of AI in Mental Health Triage

Enhanced Accessibility and Reduced Barriers

AI-powered mental health tools significantly enhance accessibility to care, addressing long-standing barriers such as geographical isolation, limited specialist availability, high costs, and social stigma. Many regions, particularly rural areas, have few or no mental health professionals. AI tools lower these barriers by providing remote, 24/7 access to support, regardless of location or economic constraints. This is particularly impactful in developing markets and for non-native speakers, as generative AI can offer care in a patient's native language.

By automating initial screening and assessment processes, AI reduces the burden on limited staff, allowing clinics to evaluate more patients and potentially shorten wait times for specialist referrals. This has led to substantial improvements in access; for example, an AI-enabled self-referral tool increased total referrals by 15% in NHS Talking Therapies services. Furthermore, AI-facilitated referrals have shown a remarkable increase in access for underserved populations, including a 179% increase in nonbinary patients and a 29% increase in ethnic minority individuals accessing care. Limbic AI, for instance, has seen a 15% increase in referrals, with the largest increases in minority groups, and a 5-day reduction in patient waitlist times. The anonymity offered by AI chatbots also helps reduce the stigma associated with seeking mental health support, making it easier for individuals to take the first step.

Improved Efficiency and Workflow Optimization

AI significantly enhances the efficiency of mental health services and optimizes clinical workflows. By automating parts of the initial assessment process, AI tools free up valuable clinician time, allowing clinics to process more patients needing evaluation, potentially reducing wait times and improving overall clinic flow. This streamlined triage process can reduce administrative burdens and clinician burnout.

AI notetakers, for example, assist providers with documenting patient visits, capturing conversations more quickly and accurately, and allowing clinicians to be more present with patients instead of focusing on jotting down notes. AI can also organize care plans, track medication changes, session attendance, and user feedback, building a clearer picture of what works for each person over time.

In referral systems, AI revolutionizes management by predicting "clean or rejected" referrals, extracting and interpreting data from referral documents using NLP, and automating tasks like creating patient charts, verifying insurance, and scheduling appointments. This drastically cuts processing time, from an average of 10 minutes to just 10 seconds in some cases. Montage Health, for instance, reduced its referral processing time from 23 days to 1.5 days after implementing AI. This reduction in administrative workload allows healthcare teams to focus more on direct patient care. Limbic AI reports a 50% reduction in assessment times, handing back up to 30 minutes of clinical time per assessment to staff.

Greater Accuracy and Early Intervention

AI's ability to analyze vast datasets and identify complex patterns provides objective, data-driven insights that can improve the accuracy of mental health diagnosis and facilitate early intervention. By analyzing data patterns that might be subtle to a human reviewer during a quick initial interaction, AI can potentially flag individuals at higher risk of certain conditions, facilitating earlier intervention. This leads to better patient outcomes and can reduce the need for more intensive, costly interventions down the line.

AI models have demonstrated high accuracy rates in detecting various mental illnesses. For instance, AI tools can detect depression with up to 80% accuracy by evaluating voice tone, facial expressions, and keystroke dynamics. In a study on anxiety and depression comorbidity, AI models achieved accuracy ratings ranging from 70% to 83% from voice recordings. For suicide attempts, AI models have achieved up to 92% accuracy in prediction. Research by IBM and the University of California revealed that AI has the power to detect various mental illnesses with an accuracy rate between 63% and 92%.

The consistency offered by AI tools ensures uniform evaluations, mitigating variability and enhancing diagnostic accuracy across different practitioners and environments. By identifying risk patterns and early signs, AI enables timely treatment, which is crucial for achieving positive treatment results. As with detection more broadly, these outputs are probabilistic signals and risk assessments; the final diagnostic judgment remains with the human clinician.

VII. Challenges and Ethical Considerations

Algorithmic Bias and Fairness

A significant challenge in the deployment of AI in mental health triage is the potential for algorithmic bias, which can perpetuate and exacerbate existing healthcare disparities. These biases typically stem from three primary sources: biased training data, problematic algorithm design, and the context of implementation.

AI algorithms learn patterns from historical healthcare data, which often reflects existing societal inequities. If training data lacks diversity or contains historical biases (e.g., predominantly from white men), these patterns become encoded in the algorithm's behavior, leading to worse performance and underestimation of need for underrepresented groups. This can result in biased answers and less effective support for individuals from diverse racial, cultural, or socioeconomic backgrounds. For instance, a study found that AI-based health tools recommended more advanced diagnostic tests for high-income individuals and basic or no tests for low-income groups with the same symptoms. Similarly, individuals identifying as LGBTQIA+ were recommended mental health assessments six to seven times more frequently than clinically indicated.

Problematic algorithm design can also introduce bias through proxy variables (e.g., zip code as a proxy for race), ill-defined labels, or optimization goals that prioritize cost efficiency over health equity. The "black box" nature of many AI systems, where doctors and patients do not fully understand how decisions are made, further complicates the identification and mitigation of bias. A Stanford study revealed that AI therapy chatbots exhibited increased stigma towards conditions like alcohol dependence and schizophrenia compared to depression, a bias consistent across different AI models. This stigmatization can be detrimental, potentially causing patients to discontinue essential mental health care.

To promote fairness, it is essential to diversify training data, explore diversity in algorithm design, systematically audit for discriminatory biases, and implement transparency and accountability measures. Including diverse stakeholders, such as mental health professionals and marginalized communities, in the design and evaluation of AI tools is crucial to reduce bias and ensure ethical, effective, and equitable interventions.
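
One way to make "systematically audit for discriminatory biases" concrete is to compare an error rate across demographic groups. The sketch below is only an illustration under stated assumptions: the record layout, the group labels, and the toy data are made up for this example, and the false-negative rate (high-risk patients the tool failed to flag) is just one of several fairness metrics an audit might use.

```python
from collections import defaultdict

def false_negative_rate_by_group(records):
    """Return {group: FNR}, where FNR = missed high-risk cases / all true high-risk cases.

    Each record is a hypothetical tuple: (group, truly_high_risk, flagged_by_model).
    """
    missed = defaultdict(int)
    positives = defaultdict(int)
    for group, truly_high_risk, flagged in records:
        if truly_high_risk:
            positives[group] += 1
            if not flagged:
                missed[group] += 1
    return {group: missed[group] / positives[group] for group in positives}

def max_disparity(rates):
    """Largest gap in the rate between any two groups."""
    values = list(rates.values())
    return max(values) - min(values)

# Made-up toy data for illustration only; not drawn from any study.
records = [
    ("A", True, True), ("A", True, True), ("A", True, False), ("A", True, True),
    ("B", True, True), ("B", True, False), ("B", True, False), ("B", True, True),
    ("A", False, False), ("B", False, True),
]

rates = false_negative_rate_by_group(records)
gap = max_disparity(rates)
print(rates, gap)
```

In this toy data the tool misses twice as many high-risk patients in group B as in group A. A real audit would need far larger samples, confidence intervals, and clinical input on which metric and which gap are acceptable.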

Data Privacy and Security

The highly sensitive nature of mental health data presents significant privacy and security concerns for AI-driven applications. This data includes personal thoughts, feelings, medical records, therapy session transcripts, behavioral patterns, and real-time emotional states, all of which must remain confidential. The risk of data breaches, unauthorized sharing, or misuse by third parties is a critical concern, as such incidents can severely harm patients and erode trust in healthcare providers and AI tools. In 2023, the average healthcare data breach cost almost $11 million, with over half of breaches originating from careless or uninformed staff.

Robust safeguards are essential to protect patient confidentiality. This includes implementing strong data governance practices such as Role-Based Access Controls (RBAC) to restrict access to sensitive data, and comprehensive encryption for data both at rest and in transit. Regular risk assessments are necessary to identify potential issues and ensure compliance with regulations like HIPAA (Health Insurance Portability and Accountability Act) in the U.S. and GDPR (General Data Protection Regulation) in the EU. Therapists must establish clear Business Associate Agreements (BAAs) with AI vendors outlining privacy responsibilities.
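
The idea behind Role-Based Access Controls can be sketched in a few lines: each role maps to an explicit set of permitted actions, and anything not listed is denied. The role names, action names, and permission table below are hypothetical and chosen only for illustration; a production system would rely on its platform's access-control and audit-logging features rather than a hand-rolled table.

```python
from enum import Enum

class Role(Enum):
    CLINICIAN = "clinician"
    INTAKE_STAFF = "intake_staff"
    BILLING = "billing"

# Hypothetical permission table: deny by default, grant explicitly.
PERMISSIONS = {
    Role.CLINICIAN: {"read_transcript", "read_risk_score", "write_note"},
    Role.INTAKE_STAFF: {"read_risk_score"},
    Role.BILLING: set(),
}

def is_allowed(role, action):
    """Return True only if the action is explicitly granted to the role."""
    return action in PERMISSIONS.get(role, set())

print(is_allowed(Role.CLINICIAN, "read_transcript"))     # clinician may read transcripts
print(is_allowed(Role.INTAKE_STAFF, "read_transcript"))  # intake staff may not
```

The deny-by-default design means a newly added role or action exposes nothing until someone deliberately grants it.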

Prioritizing patient consent and data transparency is paramount. Patients must provide informed consent, fully understanding how AI will use their data, the potential risks, and their right to decline AI involvement or revoke consent at any time. This also includes transparent data ownership policies and the ability for patients to opt out or have their data deleted. Designing AI systems with privacy-by-design principles, collecting only necessary data, and using de-identification techniques are crucial. Continuous training and monitoring of staff on privacy regulations and cybersecurity are also vital, as internal staff are a significant source of data leaks.
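
Collecting only necessary data and hiding identity can be illustrated with two small helpers: one keeps only an allow-listed set of fields, and the other replaces a patient identifier with a keyed hash. This is a minimal sketch under stated assumptions: the field names and the key are hypothetical, and keyed pseudonymization on its own does not amount to full de-identification under HIPAA or anonymization under GDPR.

```python
import hashlib
import hmac

def pseudonymize(patient_id: str, secret_key: bytes) -> str:
    """Replace an identifier with a keyed SHA-256 hash (HMAC).

    The same ID and key always give the same pseudonym, so records can still
    be linked, but the original ID cannot be recovered without the key.
    """
    return hmac.new(secret_key, patient_id.encode("utf-8"), hashlib.sha256).hexdigest()

def minimize(record: dict, allowed_fields: set) -> dict:
    """Keep only the fields the downstream model actually needs."""
    return {key: value for key, value in record.items() if key in allowed_fields}

# Hypothetical record and key, for illustration only.
key = b"example-key-store-in-a-secrets-manager"
raw = {"patient_id": "P-001", "name": "Jane Doe", "phq9_score": 14, "free_text": "..."}

safe = minimize(raw, {"phq9_score"})
safe["pseudonym"] = pseudonymize(raw["patient_id"], key)
print(safe)
```

The key must be stored separately from the data, since anyone holding both can re-link pseudonyms to patients.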

Limitations in Emotional Understanding and Human Connection

A fundamental limitation of AI in mental health is its inability to fully replicate the empathy, understanding, and genuine emotional connection provided by human therapists. While AI-driven chatbots can simulate empathy using sentiment analysis and NLP, they struggle with the nuance, cultural variations in emotional expression, and the inherent unpredictability of human emotions. This can lead to misinterpretations or inadequate responses, particularly in high-risk situations. For example, an AI chatbot might fail to recognize suicidal intent due to indirect language, providing generic advice instead of escalating for immediate human intervention.

The risk of over-immersion or over-reliance on AI tools is also a concern, potentially leading to increased social isolation or avoidance of seeking help from human professionals. Unlike human relationships, interactions with AI are not symmetrical or mutual, and users might favor AI due to its consistent positivity and constant availability, which could lead to a loss of personal contacts and loneliness.

It is consistently emphasized that AI is a tool to support health professionals, not to replace them. Clinical diagnosis and treatment require human expertise, empathy, and the ability to interpret subtle cues and adjust approaches in real-time, which AI, operating based on algorithms, cannot fully replicate. The therapeutic alliance, a crucial component of effective therapy, relies on genuine human connection that AI cannot provide. Therefore, maintaining an optimal balance between AI involvement and human oversight is crucial to ensure that AI augments rather than diminishes the quality of care.

Regulatory Gaps and Accountability

The rapid advancement of AI in healthcare has outpaced the development of comprehensive regulatory frameworks, leading to a lack of universal standards for evaluating the safety and efficacy of AI-driven mental health tools. In the U.S., most mental health apps are classified as low risk and fall under "enforcement discretion" by the FDA, meaning they do not require review or certification. However, digital therapeutics that seek reimbursement must obtain FDA clearance, indicating a growing need for more stringent oversight.

The absence of robust regulation raises concerns about misuse and the potential for harm, particularly given the sensitive nature of mental health interventions. There is a critical need for national post-market surveillance programs to assess risks and benefits in real-world settings, identify apps whose clinical trial results do not translate effectively, detect rare adverse effects, and monitor the impact of feature changes. Building such an oversight system is challenging due to the sheer volume of mental health-related apps (estimated at 20,000) and the rapid evolution of software, which can quickly render usability and risk data outdated.

Furthermore, questions of accountability arise when AI systems provide harmful advice or fail to prevent a crisis. It can be difficult to determine who is responsible: the developer, the clinician, or the user. Clear rules about responsibility are necessary to protect patient rights and guide legal actions. Healthcare leaders using AI must set these rules within their organizations, and contracts with AI vendors must specify how errors are handled, how problems are reported, and how they will be resolved. Continuous monitoring of AI performance in clinics is also essential to identify and rectify mistakes promptly. Governments and international organizations must collaborate to establish clear guidelines for AI-based mental health apps, ensuring regular audits, data protection, and accountability.

VIII. Real-world Applications and Initiatives

Leading AI Mental Health Companies and Platforms

Several innovative companies and platforms are at the forefront of integrating AI into mental healthcare, offering diverse solutions to enhance accessibility, efficiency, and personalized support.

Woebot, Wysa, and Youper are prominent examples of AI-powered chatbots and virtual assistants that provide direct therapeutic support. These platforms leverage Natural Language Processing and machine learning to engage users in text-based conversations, offering evidence-based support and self-care techniques rooted in Cognitive Behavioral Therapy (CBT), Dialectical Behavior Therapy (DBT), and mindfulness. They provide 24/7 access, anonymity, and a judgment-free space, making mental health support more accessible and less intimidating for many users. Wysa, for instance, has guided over 500 million conversations and is used by millions globally, with 91% of users finding it helpful.

Limbic AI focuses on clinical AI solutions to improve efficiency and outcomes across the mental healthcare continuum. Their "Limbic Access" tool streamlines patient intake and triage, reducing assessment times by 50% and improving diagnostic accuracy. "Limbic Care" acts as an AI companion for patient support between sessions, integrating with treatment plans and providing on-demand conversational support. Limbic reports a 15% increase in referrals, particularly among minority groups, and a 5-day reduction in patient waitlist times.

Spring Health operates a continuous care model, integrating AI to reduce friction, personalize care, and improve outcomes while maintaining human connection. Their AI-powered features include guided pre-appointment intake, session summaries with takeaways and automated journaling, and "in-the-moment conversations" for real-time support between sessions. Spring Health emphasizes a responsible AI approach, with 95% user satisfaction and zero major safety concerns reported due to real-time escalation protocols to licensed clinicians.

Kana Health offers an "AI co-pilot" designed to assist licensed therapists. It helps therapists quickly understand client situations and symptoms for faster triage, provides AI-based recommendations for accurate diagnoses and treatment plans, and automates client check-ins for remote tracking of progress. Kana Health aims to improve clinical efficacy, break down stigma, facilitate early detection, and enhance accessibility and affordability.

Other notable innovators include Ellipsis Health, pioneering voice-based mental health assessments through vocal biomarkers for real-time early identification of mental illness; and Upheal, which tackles administrative burden by automating tasks like progress notes for mental health professionals.

Key Research Institutions and Collaborations

Leading academic and research institutions are actively driving advancements in AI for mental health through dedicated centers and strategic collaborations.

SUNY Downstate Health Sciences University has launched the Global Center for AI, Society, and Mental Health (GCAISMH). This collaborative initiative, with partners like the University at Albany and the Health Innovation Exchange, focuses on developing AI-driven tools for preventing, diagnosing, and treating mental health and brain disorders, particularly in underserved communities. The center's work includes using generative AI models, developing "digital twins" of the brain for personalized care, and building safe, ethical, and inclusive AI tools. GCAISMH is building a global network of partners, including tech and AI leaders like IBM, Google, Microsoft AI for Health, and NVIDIA, as well as humanitarian organizations and universities worldwide (e.g., Yale University, University of California, San Diego).

Stanford Medicine's Department of Psychiatry and Behavioral Sciences hosts the Artificial Intelligence for Mental Health (AI4MH) initiative. Its primary goal is to transform the research, diagnosis, and treatment of psychiatric and behavioral disorders through the creation and application of responsible AI. AI4MH focuses on innovation, translating AI into mental health settings, fostering collaboration between AI scientists and mental health experts, and educating professionals on responsible AI utilization.

These institutions and their collaborations are critical for expanding datasets, enhancing research capabilities, and facilitating the application of AI tools in real-world mental health settings, especially in communities with limited resources.

Pilot Programs and Case Studies in Clinics/Hospitals

Real-world pilot programs and case studies demonstrate the practical impact of AI in mental health triage within clinical and hospital settings.

At Kingston Health Sciences Centre (KHSC), an AI-assisted triage system, the Online Psychotherapy Tool (OPTT), was introduced in their Mental Health and Addiction Care program. This system uses machine learning to assess patient needs and recommend appropriate care levels, immediately flagging crisis situations for urgent intervention. Between 2023 and 2024, this initiative successfully cut appointment wait times by more than 50%. The OPTT also connects patients with diagnosis- and needs-based online therapy modules, with nursing staff providing support while patients await their first appointment, thereby lowering the risk of crisis.
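
The general pattern described here (score the patient, recommend a care level, and send any crisis signal straight to a human) can be sketched as a simple routing function. This is not OPTT's actual logic, which the source does not describe. The thresholds, care-level names, and the `crisis_indicator` input are all hypothetical, and a real system would have them set and validated clinically.

```python
from dataclasses import dataclass

@dataclass
class TriageResult:
    care_level: str
    needs_urgent_human_review: bool

def route(risk_score: float, crisis_indicator: bool,
          urgent_threshold: float = 0.8,
          moderate_threshold: float = 0.4) -> TriageResult:
    """Map a model risk score in [0, 1] to a hypothetical care level.

    Any crisis indicator overrides the score and escalates to a human.
    """
    if not 0.0 <= risk_score <= 1.0:
        raise ValueError("risk_score must be between 0 and 1")
    if crisis_indicator or risk_score >= urgent_threshold:
        return TriageResult("urgent", True)
    if risk_score >= moderate_threshold:
        return TriageResult("clinician_assessment", False)
    return TriageResult("self_guided_modules", False)

print(route(0.1, crisis_indicator=True))   # crisis flag wins even at a low score
print(route(0.5, crisis_indicator=False))
```

Checking the crisis flag before the score is the key design choice: a low model score never suppresses an explicit crisis signal.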

In England, a multi-site observational study across 28 NHS Talking Therapies services found that an AI-enabled self-referral tool led to a 15% increase in total referrals. This increase was significantly larger than the baseline observed in matched services using traditional self-referral methods, demonstrating AI's potential to enhance accessibility, particularly for minority groups.

Beyond mental health, AI has shown efficacy in broader behavioral health screenings within hospitals. A study released by the National Institutes of Health found that an AI screening tool was as effective as healthcare providers in identifying hospitalized adults at risk for opioid-use disorder and referring them to inpatient addiction specialists.

Furthermore, Augmented Reality (AR), enhanced by AI, is being piloted for psychiatric treatment, particularly for exposure therapy in trauma and anxiety. This technology simulates diverse social and occupational environments, allowing patients to practice real-life interactions and improve their social and occupational functioning. For individuals with PTSD who become homebound due to avoidance, AR allows them to confront feared scenarios in a controlled yet immersive environment, leading to functional gains and a return to real-world activities. This transdiagnostic potential extends to conditions like autism and chronic mental illness for social skills training.

These pilot programs and case studies underscore AI's practical utility in streamlining processes, improving access, and delivering targeted interventions within diverse clinical settings.

IX. Future Outlook and Recommendations

Emerging Trends in AI for Mental Health

The trajectory of AI in mental health is characterized by several promising emerging trends aimed at enhancing the depth, personalization, and reach of care. A key area of development is the creation of more emotionally intelligent AI tools capable of real-time emotional analysis and adaptive treatment. This evolution seeks to bridge the current gap in AI's ability to truly understand and respond to human emotions with genuine sensitivity.

Further integration with telehealth platforms and virtual/augmented reality (VR/AR) therapy is anticipated. AR, for instance, is already being used to simulate real-world environments for exposure therapy, allowing patients to practice social and occupational interactions, and its wireless design enables telemedicine access for geographically isolated patients. The future envisions AI generating entire scenes, characters, and events based on a clinician's description, tailored to individual patient needs.

The development of "digital twins" of the brain is another cutting-edge trend, aiming to personalize care using wearable and clinical data. This involves creating highly individualized computational models that can predict how a patient might respond to different interventions, moving towards precision mental health.

Given increasing concerns about data privacy, there is a growing trend towards privacy-centric AI models. Techniques like federated learning, which allow analysis of behavioral data directly on users' devices without central server transfer, are gaining traction to minimize sharing of personally identifiable information. This approach not only helps meet regulatory requirements but also builds trust with users. Concurrently, there is a greater emphasis on developing fair and inclusive algorithms, with new tools being created to identify and reduce bias in AI training data, ensuring equitable predictions across diverse populations.
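
The core of federated learning can be shown with a toy version of federated averaging: each device takes a training step on its own data, and only the resulting model weights, never the raw data, are sent back and averaged. The one-parameter model, learning rate, and client data below are assumptions made for illustration. Real deployments add secure aggregation, client sampling, and often differential privacy.

```python
def local_update(weights, data, lr=0.05):
    """One gradient step of least squares for y = w * x, using only on-device data."""
    w = weights[0]
    grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
    return [w - lr * grad]

def federated_average(client_weights, client_sizes):
    """Average client weights, weighted by how many samples each client holds."""
    total = sum(client_sizes)
    dim = len(client_weights[0])
    return [
        sum(w[i] * n for w, n in zip(client_weights, client_sizes)) / total
        for i in range(dim)
    ]

# Toy on-device datasets, all generated from y = 2 * x. They never leave the "device".
clients = [
    [(1.0, 2.0), (2.0, 4.0)],
    [(3.0, 6.0)],
]

global_w = [0.0]
for _ in range(50):
    updates = [local_update(global_w, data) for data in clients]
    global_w = federated_average(updates, [len(data) for data in clients])

print(global_w)  # approaches [2.0]
```

Only `updates` crosses the device boundary in this sketch. Model updates can still leak information about the underlying data, which is why federated learning is usually combined with the additional protections noted above.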

Responsible Development and Implementation Strategies

The transformative potential of AI in mental health hinges on its responsible development and implementation. This requires adherence to several critical principles:

Prioritizing Human Oversight and Judgment: AI should always serve as a supportive tool, augmenting clinical capacity rather than replacing human mental health professionals. Clinicians must maintain ultimate decision-making authority, critically evaluating AI-generated content and suggestions before incorporating them into patient records or treatment plans.

Robust Data Privacy and Security Measures: Given the highly sensitive nature of mental health data, stringent safeguards are non-negotiable. This includes implementing strong data governance with Role-Based Access Controls (RBAC), comprehensive encryption for data at rest and in transit, and regular risk assessments to ensure compliance with regulations like HIPAA and GDPR. Business Associate Agreements (BAAs) with AI vendors are crucial to define privacy responsibilities. Adopting privacy-by-design principles from the outset, collecting only necessary data, and employing identity-hiding techniques are also essential.

Addressing Algorithmic Bias: To mitigate bias, it is imperative to diversify training data to accurately reflect diverse populations, explore diversity in algorithm design, and systematically perform audits for discriminatory biases. Involving diverse stakeholders, including mental health professionals with cultural expertise and marginalized communities, in the design and evaluation process is critical for creating culturally sensitive and equitable solutions.

Clear Informed Consent and Transparency: Patients must be fully informed about how AI is used in their care, including potential benefits, risks, data collection practices, and the limitations of AI. Consent should be explicit, and patients must retain the right to decline AI-driven care or revoke consent at any time. Transparency in AI models, including understanding data sources and usage methods, fosters trust with both patients and staff.

Continuous Monitoring and Reassessment: Therapists should continuously monitor and critically challenge AI outputs for inaccuracies and biases, intervening promptly if the AI produces incorrect, incomplete, or inappropriate content or recommendations. Regular interaction monitoring helps assess the effectiveness and safety of patient-AI exchanges.

Establishing Clear Accountability Frameworks: Clear rules about responsibility are necessary in case of AI failures or harmful advice. Contracts with AI vendors should specify who handles errors and how problems are reported and resolved.

Fostering Collaboration: Successful integration requires collaboration among researchers, developers, clinicians, policymakers, and patients to ensure ethical design, validate effectiveness, and facilitate real-world deployment.

X. Conclusions

AI-powered mental health triage stands as a pivotal innovation poised to significantly transform mental healthcare delivery. The analysis demonstrates that AI's capacity to detect subtle signs of mental distress, leveraging multimodal and continuous data streams from EHRs, voice analysis, wearables, and digital footprints, offers an unprecedented opportunity for early detection and proactive intervention. This capability moves mental health assessment beyond subjective, episodic evaluations to a more objective, longitudinal understanding of an individual's well-being. Furthermore, AI systems are proving instrumental in streamlining triage processes, reducing wait times, optimizing resource allocation, and facilitating personalized care pathways, thereby enhancing accessibility and efficiency, particularly in underserved areas.

However, the full realization of AI's promise is contingent upon a rigorous and ethical implementation framework. The inherent limitations of AI in replicating genuine human empathy and the complexities of nuanced emotional understanding necessitate that AI remains a tool to augment, rather than replace, human clinical expertise. Algorithmic bias, stemming from unrepresentative training data or flawed design, poses a significant risk of perpetuating and exacerbating existing healthcare disparities. Paramount importance must be placed on robust data privacy and security measures, informed consent, and transparent data governance to protect highly sensitive patient information. The current regulatory landscape, which lags behind the rapid pace of AI development, underscores the urgent need for clear standards, post-market surveillance, and defined accountability for AI failures.

In conclusion, the optimal future for AI in mental health is a hybrid model where AI acts as a powerful force multiplier for human professionals. By automating routine tasks, providing data-driven insights for early detection, and streamlining administrative processes, AI frees clinicians to focus on the irreplaceable aspects of care: empathetic connection, complex diagnostic judgment, and tailored therapeutic interventions. Achieving this future requires unwavering commitment to ethical development, continuous monitoring, and collaborative efforts among all stakeholders to ensure that AI serves to create a more equitable, accessible, and effective mental healthcare system for all.

FAQ Section

Q: What is AI triage in mental health?

A: AI triage in mental health involves using artificial intelligence to detect subtle signs of mental health issues and guide patients towards appropriate treatment pathways.

Q: How does AI enhance clinical efficiency in mental health triage?

A: AI enhances clinical efficiency by automating initial assessments and referrals, allowing healthcare providers to focus on more complex cases.

Q: What are some challenges in implementing AI in mental health triage?

A: Challenges include the accuracy and reliability of AI-driven diagnoses, data biases, and the need for careful integration with existing healthcare systems.

Q: How does AI detect subtle signs of mental health issues?

A: AI algorithms analyse speech patterns, facial expressions, and written text to identify markers of mental health conditions.

Q: What is the role of AI in personalised treatment pathways?

A: AI can recommend personalised treatment plans by analysing a patient's symptoms, medical history, and genetic information.

Q: How has the NHS integrated AI into its mental health services?

A: The NHS has integrated an AI self-referral tool into its Talking Therapies service to streamline the triage process and improve service capacity.

Q: What is the DAISY system, and how does it assist in mental health triage?

A: DAISY is an AI-supported system that automates the ED triage process by capturing subjective and objective data.

Q: How does AI improve the accuracy of mental health diagnoses?

A: AI improves diagnostic accuracy by analysing large datasets and identifying patterns that may go unnoticed by human clinicians.

Q: What are the benefits of using AI in mental health triage?

A: Benefits include enhanced clinical efficiency, early detection of mental health issues, and personalised treatment pathways.

Q: What are some ethical considerations in using AI for mental health triage?

A: Ethical considerations include data privacy, bias in AI algorithms, and the need for transparent and explainable AI decisions.